Mac supports Data Strategy Professionals with newsletter writing, course development, and research into Data Management trends.

Importance of AI Governance

Understanding the systems for mitigating AI risks and maximizing benefits. AI Governance Frameworks Comparison post 4 of 6.

Author: Mac Jordan | Post Date: Feb 28, 2025 | Last Update: Mar 5, 2025

Drawing from the definitions of various organizations, AI Governance refers to the set of policies and mechanisms that help ensure AI systems remain ethical and safe throughout the AI lifecycle. AI Governance is a somewhat broad term encompassing the development of AI principles and their practical implementation, with the goal of both increasing benefits and decreasing risks related to AI systems.1, 2, 3, 4, 5

Gartner highlights that AI Governance is "currently characterized by an absence of agreed-upon standards or regulations."7 The Responsible AI Institute describes AI Governance as "an emergent but rapidly evolving field."8 Significant technical AI developments and the proliferation of AI applications over the last 10 years have rapidly developed the field of AI Governance. This is especially true of the past two years given the emergence of generative AI (gen AI).

Contents

- Introduction to AI Governance
  - Priority for Large Businesses
  - Types of AI Governance
- Roles and Responsibilities
  - Tasks in Practice
  - AIGA Model Case Study
  - CEOs
  - Data Management and Data Governance
  - Data Quality and Data Security
  - Oversight Groups
- Business Benefits
  - Incentives and Responsibility are Aligned
  - Increased ROI
  - Building Trust
  - Regulatory Compliance
- Adoption Rates and Barriers
  - Rates of Business Adoption
  - Deployment and Operation Barriers
  - Planning and Resource Barriers
- Discussion
- Conclusion

Introduction to AI Governance

Priority for Large Businesses

Many businesses will understandably hesitate to implement AI Governance. Keeping track of best practices can be challenging, AI Governance affects many parts of a business, and implementing and operating it requires new expertise. It could be tempting to start with "informal governance" – using existing governance systems – with a plan to implement ad hoc systems as specific risks arise.1

However, in addition to encouragement from governments, implementing comprehensive, purpose-built organizational AI Governance frameworks as part of the AI adoption process has wide support among large businesses.5 S&P Global argues for "robust, flexible, and adaptable governance frameworks."11 At the same time, BCG claims that robust AI Governance is how "organizations [can] ensure that they can deliver the benefits of AI while mitigating the risks."12

The AI Governance frameworks produced by the three largest cloud vendors similarly emphasize the importance of AI Governance:

- Microsoft's Responsible AI Standard: recognizing that AI Governance lags behind technological risks, Microsoft developed its framework to guide product teams; they advocate for "principled and actionable AI Governance norms" through cross-sector collaboration13

- AWS' Cloud Adoption Framework for Artificial Intelligence, Machine Learning, and Generative AI: "AI Governance... is instrumental in building trust, enabling the deployment of AI technologies at scale, and overcoming challenges to drive business transformation and growth;" AWS emphasizes that AI Governance frameworks create consistent practices for managing risks, ethics, data quality, and regulatory compliance14

- Google's Secure AI Framework: Google has established AI Governance policies covering responsible principles, safety, and security; their framework guides organizations in implementing "security standards for building and deploying AI responsibly"15

All businesses that have used, are currently using, or are considering adopting AI would benefit from learning more about AI Governance frameworks and how to implement them to bolster risk mitigation and value creation efforts related to AI.

Types of AI Governance

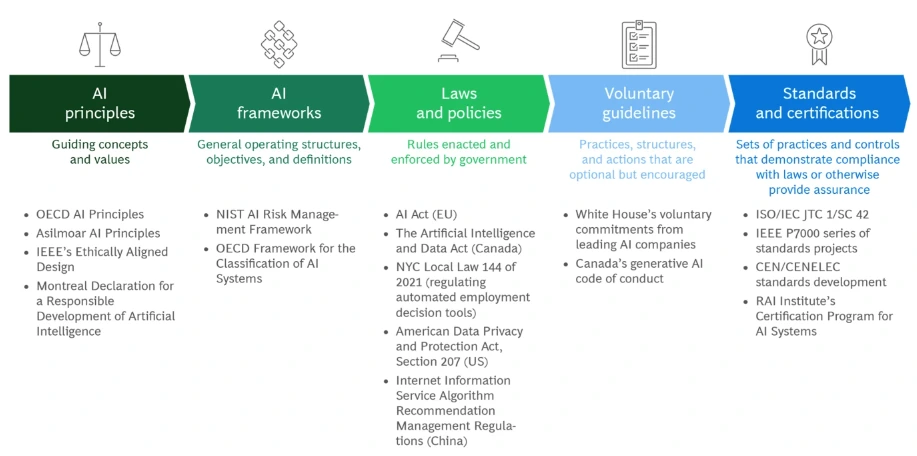

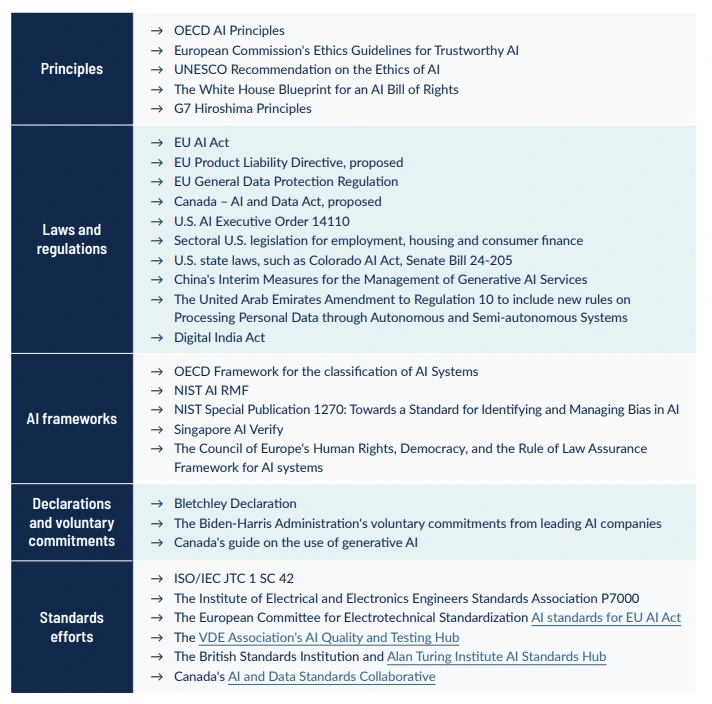

Multiple classification systems currently exist to categorize different types of AI Governance. We've found the taxonomies proposed in the separate collaborations of BCG and RAII (Figure 3.1) and IAPP and FTI Consulting (Figure 3.2) to be the most useful descriptions of the current AI Governance field.2, 16 These taxonomies roughly converge on five primary types of AI Governance, complete with specific examples.

AI principles, laws and regulations, and frameworks are likely the types of AI Governance most relevant to businesses. AI principles are necessary for businesses to define their ethics and safety-focused objectives for AI. See what some large businesses experienced in a pilot test of Australia's "AI Ethics Principles."17 Understanding AI laws and regulations is necessary for businesses to maintain legal compliance. Convergence Analysis's "2024 State of the AI Regulatory Landscape" offers a valuable, up-to-date overview.18

We define an AI Governance framework as a document outlining formal policies and procedures for practically implementing AI principles and maintaining regulatory compliance. Businesses interested in improving AI Governance by learning from or integrating existing AI Governance frameworks will benefit from reviewing the list of AI Governance framework examples in Figure 3.2 as a starting point.

Roles and Responsibilities

Tasks in Practice

An AI Governance framework requires that a business implement objective-aligned policies and procedures for governing AI across the AI lifecycle. Businesses can ideally develop policies and procedures explicitly for AI Governance, but Singapore AI proposes that they can also adapt existing governance structures to accommodate AI.19 For example, AI risk mitigation processes can be developed within a business's existing risk management structure.

While an AI's specific features and intended use cases will influence what governance mechanisms a business employs to govern it, there are many broad mechanisms that businesses are likely to practice along the AI lifecycle. Singapore AI outlines risk control, maintenance, monitoring, documentation, review measures, and reviews of how stakeholders communicate as common types of operational tasks.

Although it predates the proliferation of gen AI, a 2022 IDC survey offers more granular insight into which AI Governance capabilities businesses consider critical. Dashboards to assess, monitor, and drive timely actions/risk management (61.7%), multi-persona collaboration (60%), and support governance for third-party models and applications (60%) were the three most commonly cited critical capabilities. Even the least-cited of the nine capabilities surveyed, reports for compliance, was named by 43.1% of respondents, indicating that many businesses consider a wide range of capabilities critical.4

Additionally, 2023 research from IAPP-EY highlights which business functions are currently, or are expected to be, tasked with AI Governance responsibilities. Among functions with primary responsibility, information technology (19%), privacy (16%), legal and compliance (14%), and data governance (11%) were the most commonly involved. Meanwhile, the proportion of business functions with secondary responsibility for AI Governance is far higher. Privacy (57%), legal and compliance (55%), security (50%), and information technology (46%) were the functions that at least 35% of respondents indicated were involved with AI Governance.20

Taking a bigger picture view, the OECD's "Tools for Trustworthy AI" report provides a taxonomy of the types of tools involved in implementing AI principles. Here, tools are defined as "instruments and structured methods that can be leveraged by others to facilitate their implementation of the AI Principles." These give a broader context for what deployment requires in addition to the operational system.21

AIGA Model Case Study

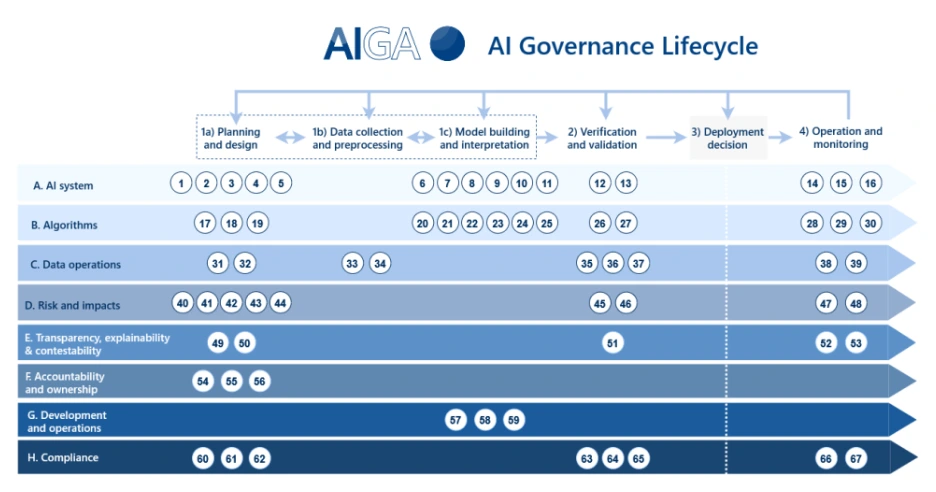

In addition to understanding the content of AI Governance frameworks as documents, it's important to understand how such a framework operates as a comprehensive system. As shown in Figure 3.3, the AI Governance and Auditing (AIGA) consortium, coordinated by the University of Turku, provides a model for how an AI Governance system that complies with the EU AI Act could operate.5, 22 Although this model primarily caters to AI developers, it could help businesses understand what their AI Governance could involve during deployment and operation.

The AIGA model outlines individual governance tasks at the intersection of different task categories, e.g., AI system, and phases along the AI lifecycle, e.g., planning and design. We are particularly interested in the tasks during the deployment and operation phases, especially those related to data operations and risk and impacts. Although this model doesn't outline any tasks for the deployment phase, many tasks occur during the operation and monitoring phase.

For data operations, data quality monitoring (task 38) and data health checks (task 39) occur during operation and monitoring. Data quality monitoring involves the AI system owner ensuring the implementation of planned data quality processes, such as disclosing standard breaches and data drift, before implementing measures to address problems. Meanwhile, the algorithm owner performs data health checks, assessing how the AI system data resources and data-related processes align with the organization's values and risk tolerance and taking appropriate measures to address any issues identified.
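As a rough illustration of what a data drift check during operation and monitoring might look like, the sketch below computes a Population Stability Index (PSI) for a single numeric feature. PSI is a common drift statistic chosen here purely for illustration; the AIGA model does not prescribe it, and the function names, the ten-bin default, and the 0.2 alert threshold are assumptions rather than anything taken from the source.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a current sample of one feature."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if the
    # baseline is constant so we never divide by zero.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the
            # first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_alert(
    baseline: Sequence[float], current: Sequence[float], threshold: float = 0.2
) -> bool:
    """Flag drift when PSI exceeds an (assumed) rule-of-thumb threshold."""
    return population_stability_index(baseline, current) > threshold
```

In a setup like this, an alert would be the trigger for the follow-up measures that the AI system owner is responsible for, not a remedy in itself.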

The diversity of tasks that AI Governance frameworks involve means that whole-organization coordination between roles is necessary for the framework to function reliably. We focus on evidence for the responsibilities and impact of key roles. These roles include CEOs who set targets and communicate cultural norms, data practitioners from policy-setting to practical implementation, and the intersecting groups that can provide practical oversight for AI programs.

CEOs

Reliable AI adoption requires that AI Governance be aligned with a business's objective and implemented throughout different business functions along the AI lifecycle. BCG and RAII determine "that responsibility for AI Governance should ultimately sit with CEOs since AI Governance includes 'issues related to customers' trust in the company's use of the technology, experimentation with AI within the organization, stakeholder interest, and regulatory risks.'"2 NIST, IBM, IDC, and TDWI echo this basic sentiment.1, 4, 23, 25

Executives look to the CEO for guidance more than to other roles. Survey results in IBM's guide to gen AI for CEOs show that roughly three times as many executives look to CEOs for guidance on AI ethics as to various other roles, from Board of Directors to Risk and Compliance Officers.1 Furthermore, 80% think business leaders should be primarily accountable for AI ethics, not technology leaders. IDC notes that regulators, too, are looking to C-level executives to ensure regulatory compliance.4

CEOs are deeply involved in implementing AI Governance. Referring to the OECD's "Tools for Trustworthy AI," we find a range of procedural and educational tasks involving the CEO.21 These include setting the tone for the risk management culture, developing open communication channels, dictating lines of authority, rolling out training, and investing resources.

Furthermore, this support might need to be sustained for years. BCG estimates that businesses take an average of three years to reach high levels of responsible AI maturity.12 The EU strongly recommends appointing an AI Governance lead to oversee a framework's implementation and maintenance to help maintain progress over time.5

Hands-on CEOs seem to substantially impact AI Governance implementation. A BCG and MIT Sloan Management Review 2023 survey found that of the 1,240 respondents, only 28% of CEOs were involved in responsible AI programs. Those businesses with an involved CEO saw 58% more business benefits on average from their program, such as accelerated innovation and long-term profitability, than those without a hands-on CEO.26

Data Management and Data Governance

IAPP and FTI refer to Data Management as "a foundational element to managing all AI systems."16 At the same time, AWS asserts that "the value of [AI] systems is driven largely by the data that makes it more effective."14 Indeed, Data Management issues are among the most common barriers to AI adoption, such as the need for Data Strategy to handle high volumes of data. Although this report focuses on AI Governance during the deployment and operation lifecycle phases, data is also critical during an AI's training, making up one part of the "AI triad."49

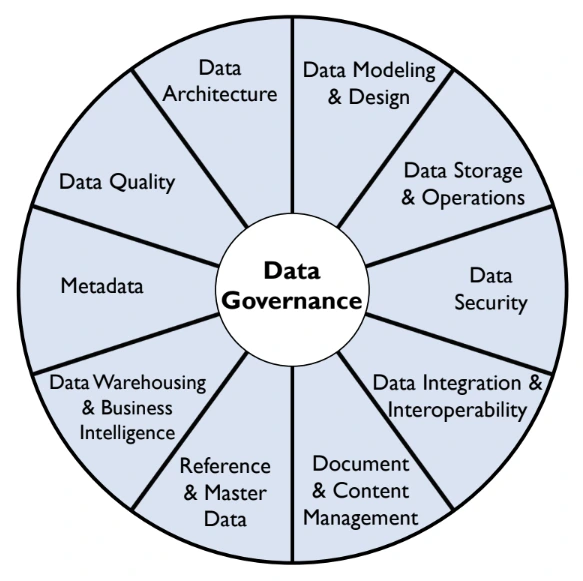

IAPP and FTI propose that Data Management considerations "include understanding what data is being used in which system; how it is collected, retained, and disposed of; if there is lawful consent to use the data; and who is responsible for ensuring the appropriate oversight." Classifying the Data Management practices using DAMA's DMBOK, as depicted in Figure 3.4, our research indicates that the strongest relationships between AI Governance and Data Management are in data governance, data quality, and data security (especially data privacy).50

The lifecycles of AI and data are deeply intertwined, and thus, so is the governance of each. Stanford's "AI Index Report 2024" emphasizes that "data governance is crucial for ensuring that the data used for training and operating AI systems is accurate, fair, and used responsibly and with consent."44

Data Governance expert Bob Seiner refers to the synthesis of AI Governance and Data Governance as AI Data Governance, where Data Governance is a subset of AI Governance. Key aspects include managing data access and securing data against breaches. Organizations enact policies and procedures for data annotation, collection, and use to meet the operational needs of AI systems responsibly. Without robust AI Data Governance, Seiner highlights that AI risks operating with "flawed, biased, or insecure data, leading to poor and potentially harmful decisions."27

TDWI's "The State of AI Readiness 2024" survey results offer insight into the demand for data practitioner roles in connection with AI Governance and some of the challenges businesses are experiencing related to AI Data Governance. Many organizations seem especially interested in hiring data engineers and upskilling business analysts to work increasingly as data scientists who also understand business.24

Lacking strong data practices has already made adopting AI challenging. TDWI found that only 20% of respondents reported a solid Data Governance program, and only 40% understand their data sources and have policies to govern different kinds of data.24 These results are consistent with McKinsey's finding that 70% of respondents struggled to define processes for Data Governance and integrating data into AI models.28

Meanwhile, less than 30% agreed they have "a company-wide Data Architecture in place for AI that can handle user growth." Businesses also need to learn how to store and govern the new, often unstructured data types that AI involves, such as PDFs and audio files.24

Data Quality and Data Security

IAPP-FTI's "AI Governance in Practice Report 2024" details AI Governance's connection to data quality and Data Security. Even during the deployment and operation phases of the AI lifecycle, data quality "impacts the quality of the outputs and performance," making it essential to creating a safe and responsible AI system. Policies and procedures should systematically measure data's accuracy, completeness, validity, and consistency across the AI lifecycle. These processes mitigate risks related to transparency and help AI perform the specific business functions assigned to it.16
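To make the idea of systematically measuring data quality more concrete, here is a minimal sketch of two of the four dimensions named above, completeness and validity, computed over a list of records. The record layout, function names, and the example validity rule are hypothetical and are not drawn from the IAPP-FTI report; accuracy and consistency usually need a reference dataset or cross-field rules and are left out.

```python
from typing import Any, Callable, Mapping, Sequence

Record = Mapping[str, Any]


def _is_missing(value: Any) -> bool:
    # Treat None and empty strings as missing; 0 and False count as present.
    return value is None or value == ""


def completeness(records: Sequence[Record], field: str) -> float:
    """Share of records in which `field` is present and not missing."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if not _is_missing(r.get(field)))
    return filled / len(records)


def validity(
    records: Sequence[Record], field: str, is_valid: Callable[[Any], bool]
) -> float:
    """Share of non-missing values of `field` that pass `is_valid`."""
    values = [r[field] for r in records if not _is_missing(r.get(field))]
    if not values:
        return 0.0
    return sum(1 for v in values if is_valid(v)) / len(values)


# Hypothetical usage: an "age" field that should fall between 0 and 120.
sample = [{"age": 34}, {"age": None}, {"age": -5}, {"age": 51}]
age_completeness = completeness(sample, "age")
age_validity = validity(sample, "age", lambda v: 0 <= v <= 120)
```

Scores like these could feed the dashboards and review processes discussed earlier, with thresholds set according to the organization's own risk tolerance.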

Businesses can use data labels as a tool in data quality assessment and review processes. Documenting and creating inventories for data sources can support understanding where data is acquired and help carry out legal due diligence. If, for example, a business uses third-party data, it must comply with the terms of service in any data-sharing agreement and attribute the origin of the data its AI uses.16 Data operations tasks previously highlighted from the AIGA model also demonstrate the role of data quality in AI Governance.22
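One way to picture a data source inventory that supports legal due diligence is a small record type plus a check for missing documentation. The sketch below is only illustrative: the field names (`agreement_ref`, `attribution`) and the rule that third-party sources need both are assumptions, not requirements stated by IAPP and FTI.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class DataSourceEntry:
    """One row of a hypothetical data source inventory."""
    name: str
    origin: str                          # where the data was acquired
    third_party: bool                    # True if supplied by an external party
    agreement_ref: Optional[str] = None  # pointer to the data-sharing agreement
    attribution: Optional[str] = None    # how the origin is credited


def due_diligence_gaps(
    inventory: Iterable[DataSourceEntry],
) -> Dict[str, List[str]]:
    """Map each third-party source to the documentation it is missing."""
    gaps: Dict[str, List[str]] = {}
    for entry in inventory:
        if not entry.third_party:
            continue
        missing = [
            field_name
            for field_name in ("agreement_ref", "attribution")
            if not getattr(entry, field_name)
        ]
        if missing:
            gaps[entry.name] = missing
    return gaps


# Hypothetical inventory with one incompletely documented vendor dataset.
inventory = [
    DataSourceEntry("crm_export", "internal CRM", third_party=False),
    DataSourceEntry("market_panel", "Vendor X", third_party=True,
                    agreement_ref="DSA-001"),
]
gaps = due_diligence_gaps(inventory)
```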

Data Security's relationship to AI Governance spans data protection and Data Privacy. With AI models vulnerable to hacking, data poisoning, and adversarial attacks, guarding the AI system's components is vital to maintaining Data Security. Failure to do so risks outcomes such as the "manipulation of outputs, stolen sensitive information, or interference with system operations." These, in turn, could lead to substantial financial losses, reputational damage to a business, widespread harm to others such as stolen customer funds, and physical safety concerns.16

Data quality is intimately connected to Data Security in AI Governance. For example, data poisoning occurs when malicious actors change the labels for data being used by an AI or inject their data into the dataset.16 Backdoors could also be created for future system access. NIST's "AI Risk Management Framework (AI RMF)" defines AI systems as being secure when they can "maintain confidentiality, integrity, and availability through protection mechanisms that prevent unauthorized access."23

Identifying Data Security risks is especially important when using third-party AI systems. IAPP and FTI outline several different contract terms that could be important to implement. These include maximizing the compatibility of a vendor's security practices with a business's practices, performing regular security assessments or audits to identify risks and ensure vendor compliance, and limiting vendor access to only the systems they need.16
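The contract term about limiting vendor access can be mirrored technically with a deny-by-default allowlist. The following is a minimal sketch under assumed names: the vendor and system identifiers are made up, and a real deployment would rely on the organization's identity and access management tooling rather than an in-memory dictionary.

```python
from typing import FrozenSet, Mapping

# Hypothetical grants: each vendor is listed only with the systems it needs.
VENDOR_GRANTS: Mapping[str, FrozenSet[str]] = {
    "vendor-a": frozenset({"model-serving-api"}),
    "vendor-b": frozenset({"model-serving-api", "feature-store-readonly"}),
}


def is_access_allowed(
    vendor: str,
    system: str,
    grants: Mapping[str, FrozenSet[str]] = VENDOR_GRANTS,
) -> bool:
    """Deny by default: allow only systems explicitly granted to the vendor."""
    return system in grants.get(vendor, frozenset())
```

Keeping the grants in one reviewable place also gives regular security assessments something concrete to audit.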

In addition to addressing other risks from poor data quality, data inventories can be designed into an AI Governance system that handles personal data to help keep businesses accountable for Data Privacy. Privacy metadata could be used to develop a single source of truth to support regulatory compliance and, if permitted, be used as high-quality data for an AI to make inferences about a population. AI-specific inventories might also be developed.

Privacy leaders often already have a direct line to CEOs and the Board of Directors. Existing Data Privacy governance systems can be adapted to accommodate policies and procedures related to AI systems interacting with personal data. In previous research, IAPP found that 73% of their members implemented privacy-focused AI Governance policies and procedures using their existing privacy systems.16

Oversight Groups

While CEOs provide primary leadership and data practitioners make up a large proportion of workers focused on practical implementation, various groups might be involved in practically overseeing AI Governance processes. Gartner proposes that many existing review boards, privacy teams, or legal departments across businesses are overwhelmed with AI-related ethical concerns and then take ad hoc actions to address them.7

Establishing dedicated oversight groups can more reliably accommodate rising AI incidents. BCG argues that a committee of senior leaders should oversee responsible AI program development and implementation, including establishing linkages to existing corporate governance structures, such as a risk committee. For example, Mastercard's Governance Council reviews AI applications deemed high risk, with various data practitioners involved, from Data Science teams to a Chief Information Security Officer, Chief Data Officer, and Chief Privacy Officer.12

In practice, Gartner's case study on IBM offers examples of what groups might exist and how their responsibilities could intersect. IBM's overall governance strategy follows a technology ethics framework. This case study explicitly outlines IBM's operationalized use-case review process for AI ethics and identifies each group involved.7

The review process begins with a distributed Advocacy Network. It consists of employees across business units who generally promote and scale a responsible AI culture. Next, each business unit includes roles called Focal Points, which proactively identify concerns, support framework implementation and regulatory compliance, and communicate between the AI Ethics Board and each business unit.

At the third level is the AI Ethics Board, co-chaired by the global AI ethics leader from IBM Research and the chief privacy and trust officer. It consists of a cross-functional and centralized body of representatives and is responsible for defining and maintaining policies, advising on AI ethics questions, and providing guidance through guardrails and education courses.

The Policy Advisory Committee, composed of senior leaders, exists at the top. It oversees the AI Ethics Board, reviews the impacts of various issues throughout the business, and acts as the final escalation point for concerns as needed. The Chief Privacy Officer (CPO) Ethics Project Office supports each group. It acts as the formal liaison between each level, manages workstreams defined by the AI Ethics Board, and supports the use-case review process.

The scale of IBM's governance structure reflects its scale as a business. For many businesses, the responsibilities performed at each level could likely be condensed into fewer levels and roles as needed, while some may not be necessary at all. Ultimately, leadership should establish the purpose and role of their AI Governance framework to determine which roles and responsibilities are essential to their business.

Business Benefits

Incentives and Responsibility are Aligned

The World Economic Forum's 2024 "Responsible AI Playbook for Investors" highlights natural tensions between business incentives and the aims of responsible AI. For example, whereas a business may want to bring a product to market rapidly, implementing AI risk mitigation policies and procedures may slow product development using AI. However, business incentives may align more with responsible AI than many businesses realize.29

As a starting point, AI Governance addresses the most prevalent barriers to AI adoption. Policies and procedures help businesses mitigate AI risks better, manage data along the AI lifecycle, and integrate new technical and logistical systems during AI adoption. As outlined above, many CEO and data practitioner AI Governance responsibilities directly address such problems.29

Implementing AI Governance further benefits businesses during the operation phase of the AI lifecycle. A paper from IBM and the University of Notre Dame proposes a Holistic Return on Ethics (HROE) framework for understanding the breadth of ROI that AI ethics affords a business. These returns range from direct economic gains to intangible (reputational) and real option (capability) improvements. We use these types of benefits to roughly categorize the benefits identified across sources.

Direct economic benefits largely come from AI Governance optimizing how efficiently a business uses AI systems. For example, AI Governance platforms are designed to bring stakeholders together along the AI lifecycle to employ best practices that maximize AI's benefits and prevent risks.4

Meanwhile, AI Governance's risk mitigation capabilities strongly contribute to producing intangible benefits for a business.30 We previously explored the impact and prevalence of harms from AI on society, such as biased facial recognition systems, and on businesses, such as data breaches. But these are only the first-order impacts. In addition to avoiding the potential direct harm done by AI, mitigating AI risks can also build trust between a business and various target groups and ensure regulatory compliance.

Former Eli Lilly CDAO Vipin Gopal frames the alternative to adopting AI Governance as choosing to power innovation using an AI system that is more prone to producing biased and unfair outcomes, having its data breached, or becoming unexplainable.31

Increased ROI

Although many businesses may be concerned about AI Governance having an overall negative impact on their costs and efficiency, evidence shows that AI Governance can increase ROI. As researchers from IBM and the University of Notre Dame argue, this ROI comes in various forms, specifically economic, intangible (reputational), and real options (capabilities).30

The effect of AI Governance on direct economic ROI is among the key factors businesses will consider. Gartner's March 2023 survey – conducted at the start of gen AI's proliferation – found various positive finance-related impacts attributed to a business's AI Governance framework. Forty-eight percent experienced more successful AI initiatives, 46% improved customer experience, 46% increased revenue, 30% decreased costs, and more.32

Gartner further asked respondents whose organizations lacked AI Governance about the negative impacts of AI that they attribute to lacking AI Governance. Forty-five percent of respondents could specify at least one negative effect, with the top three impacts being increased costs (47%), failed AI initiatives (36%), and decreased revenue (34%).32

MIT Sloan and BCG's 2022 research provides more granular insight into the direct ROI of AI Governance prior to gen AI. Specifically, they investigated the business benefits from responsible AI programs between responsible AI leaders and non-leaders. A business's responsible AI leadership level was determined by analyzing survey data about the breadth of their programs, implementation of best practices, and breadth of "considerations" they perceive as being a part of responsible AI.

The report found that responsible AI leaders are far more likely to attain various business benefits relative to non-leaders. Leaders experienced higher rates of better products and services (50% vs. 19%), enhanced brand differentiation (48% vs. 14%), and accelerated innovation (43% vs. 17%) from their responsible AI programs relative to non-leaders.

An additional finding was that a business's AI maturity level also moderates the likelihood of attaining benefits from responsible AI programs. Table 3.1 shows how business leadership levels in responsible AI and AI maturity interact. Although leadership seems to more substantially increase the chance of benefits from a responsible AI program than AI maturity does, businesses that were both responsible AI leaders and had high AI maturity were the most likely to gain benefits.

Table 3.1

| | High commitment (Leader) | Low commitment (Non-leader) |
|---|---|---|
| High program maturity | 49% | 23% |
| Low program maturity | 30% | 11% |
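The comparison between leadership and maturity in Table 3.1 can be checked with simple arithmetic. The sketch below just subtracts the table's percentages to get percentage-point gaps; the dictionary keys are labels invented for this example, and the gaps are descriptive differences, not effect sizes reported by MIT Sloan and BCG.

```python
# Percentages from Table 3.1, keyed by (maturity level, commitment level).
table = {
    ("high_maturity", "leader"): 49,
    ("high_maturity", "non_leader"): 23,
    ("low_maturity", "leader"): 30,
    ("low_maturity", "non_leader"): 11,
}

# Gap attributable to commitment, holding maturity fixed.
commitment_gap = {
    m: table[(m, "leader")] - table[(m, "non_leader")]
    for m in ("high_maturity", "low_maturity")
}

# Gap attributable to maturity, holding commitment fixed.
maturity_gap = {
    c: table[("high_maturity", c)] - table[("low_maturity", c)]
    for c in ("leader", "non_leader")
}

print(commitment_gap)  # {'high_maturity': 26, 'low_maturity': 19}
print(maturity_gap)    # {'leader': 19, 'non_leader': 12}
```

At either maturity level, the leader versus non-leader gap (26 and 19 points) is larger than the corresponding high versus low maturity gap (19 and 12 points), which is consistent with the observation that leadership matters more than maturity in this data.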

It is nonetheless notable that MIT Sloan and BCG found that most businesses with any level of responsible AI leadership and AI maturity had not yet experienced AI benefits to products and services, brand differentiation, or innovation. Increasing AI maturity levels, plus the opportunities afforded by gen AI not available at the time of this survey, plausibly mean that the majority of at least responsible AI leaders with high AI maturity are seeing business benefits from their responsible AI program. Finally, because no comparison was made between the ROI of businesses using AI with and without a responsible AI program, the relative ROI of implementing AI Governance remains unclear.

Intangible ROI could also be high from AI Governance. IBM and University of Notre Dame researchers define intangible impacts as the indirect paths to return associated with organizational reputation. From the corporate social responsibility (CSR) perspective, AI Governance produces considerable value for society, primarily by mitigating risks. The authors argue that strong CSR and environmental, social, and governance (ESG) ratings for a business "improve sales and reputation and can decrease the likelihood of customer churn." Such benefits can be estimated using the Social Return on Investment (SROI) technique.30

The researchers also emphasize the potential value of real options for businesses from AI Governance. The authors define real options as "small investments made by organizations that generate future flexibility – they position managers to make choices that capitalize on future opportunities." Resources can be invested into actions in phases throughout a project as a business learns more about which actions might be especially promising for it to take. This approach is especially beneficial when investment opportunities are highly uncertain.

For example, real options reasoning helps businesses assess and justify investments in a novel technology that builds business knowledge, skills, and technical infrastructure. In the case of AI ethics, the "capabilities an organization gains from an investment in ethical product development will further result in opportunities for possible product improvements, the mitigation of identified product issues, and possibly a greater awareness and thus improvement in the organization's employee and customer culture."

Both intangible ROI and real options are strongly connected to building trust and regulatory compliance.

Building Trust

KPMG's 2023 "Trust in AI" study argues that there are four distinct pathways to trust. These are an institutional pathway reflecting beliefs such as the adequacy of current safeguards, a motivational pathway reflecting perceived benefits of AI, an uncertainty reduction pathway regarding the mitigation of AI risks, and a knowledge pathway reflecting people's ability to understand and use AI. The first and third each highlight just how important a business's risk mitigation capabilities are for building trust in others.33

This perception of a business's commitment and ability to mitigate AI risks strongly dictates how consumers, employees, and other groups engage that business. Strong signals of a business's commitment and abilities are necessary to reliably maintain favorable perception.

To highlight just how important AI Governance is perceived as being to trust in AI, a 2024 survey from IBM indicates that three-quarters of CEOs believe that trusted AI is impossible without AI Governance.34 Many businesses might thus announce commitments to AI Governance without investing in its implementation. TechBetter's research emphasizes that failing to follow through with commitments to AI Governance amounts to "ethics washing," which misleads and potentially disillusions others about a business's motivations and reliability.35 By having untrustworthy or no AI Governance, businesses risk losing support.

Sentiments towards Data Privacy, for example, are strong, with IBM finding that 57% of consumers are uncomfortable with how their data is being handled and that 37% have switched to more privacy-protective brands.43 Consumers would also likely act based on their trust in how businesses use AI. In a separate IBM survey of IT professionals, 85% of respondents strongly or somewhat agreed that consumers are more likely to choose services from companies with transparent and ethical AI practices.36

The trust gap between customers and businesses adopting AI seems large and possibly growing. In a 2023 survey of over 14,000 international customers, research from Salesforce indicates that roughly three-quarters of customers were concerned about the unethical use of AI. Sixty-eight percent think that AI advances make it more important for businesses to be trustworthy. These concerns, likely amplified by the introduction of gen AI, might explain why consumer and business buyer openness to businesses using AI dropped between 2022 and 2023 from 82% to 73% and 65% to 51%, respectively.37

Although not specific to businesses, results from KPMG's "Trust in AI" survey of over 17,000 people internationally also indicate a large trust gap around AI in general. They found that 61% of respondents felt unsure or disagreed that current AI safeguards are sufficient to protect from AI risks. These confidence levels across the Western countries surveyed remained relatively stagnant between 2020 and 2022, which the authors suggest indicates that AI Governance adoption is lagging behind people's expectations. However, levels of trust varied considerably by geography. Most people in India (80%) and China (74%) believe current safeguards to be adequate.33

Consumer trust erodes further when AI incidents occur. BCG points out that it's not only significant AI incidents, such as a data breach, that will lose a business its consumers' trust but also individualized incidents. They give examples of someone being denied a bank loan due to a biased assessment of their request or a recommender algorithm suggesting Father's Day gift ideas to someone whose father recently died. "Today's consumers will hesitate to buy from a company that doesn't seem in control of its technology, or that doesn't protect values like fairness and decency."38

Trust in businesses by their employees is also improved by implementing AI Governance. IAPP and EY found that among Data Privacy practitioners, 65% of respondents at businesses without AI Governance don't feel confident about their privacy compliance.48 Only 12% of respondents at businesses with AI Governance implemented don't feel confident. Low confidence in their business's risk mitigation capabilities could drive away employees. Sixty-nine percent of workers in an IBM survey would be more willing to accept job offers from socially responsible organizations, while 45% are willing to take a pay cut to work at one.25

Boards and investors also need to trust AI risk mitigation practices. BCG suggests that AI incidents can harm a CEO's credibility with their board. At the same time, investors need to be confident that a business's use of AI is aligned with its corporate social responsibility statements.38 At the most significant scale, KPMG thinks that the strength of trust's impact on people's decisions could be critical to the sustained adoption of AI by businesses as a whole.33

Regulatory Compliance

Compliance with existing and preparation for future AI regulations increasingly make AI Governance systems obligatory. Businesses across all industries, sizes, and countries are increasingly asked to explain how they use AI. Gartner advises that businesses "be prepared to comply beyond what's already required for regulations such as those pertaining to privacy protection." Meanwhile, the WEF argues that doing so "helps proactively mitigate regulatory and business risks, saves costs, and ultimately influences the regulatory landscape."

Noncompliance risks substantial financial costs and legal action. For example, the most severe penalties of the EU AI Act can amount to up to €35 million or, if the offender is a company, 7% of total worldwide annual turnover, whichever is higher. Costly compliance overhead, such as from audits, is also a risk to businesses unprepared for emerging regulations.
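To make the scale of that ceiling concrete, the arithmetic can be sketched in a few lines. This is a minimal illustration rather than legal guidance: it assumes the ceiling for the most severe tier is the higher of the two amounts, and the function name and the sample turnover figures are hypothetical.

```python
# Illustrative only: ceiling for the most severe EU AI Act penalty tier,
# assuming the higher of the fixed amount and the turnover share applies.
FIXED_CAP_EUR = 35_000_000      # €35 million
TURNOVER_SHARE_PERCENT = 7      # 7% of total worldwide annual turnover


def penalty_ceiling_eur(annual_turnover_eur: int) -> int:
    """Return the maximum possible fine, in whole euros, for a company."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical turnover figures, chosen only to show where the ceiling switches.
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover €{turnover:,} -> ceiling €{penalty_ceiling_eur(turnover):,}")
```

Under these assumptions, the turnover-based amount only exceeds the fixed amount once annual turnover passes €500 million, so the percentage is the binding figure mainly for large businesses.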

While the need for regulatory compliance will be clear for many businesses, what regulations will involve is not. Among the key barriers to AI adoption previously identified was uncertainty about regulations. Across the surveys explored, between approximately 20% and 60% of businesses struggle to adopt or scale AI due to uncertainty about what regulations will involve.

Looking at current regulations is a start to understanding future regulations. Among the best sources of data on the current global regulatory landscape is the OECD Policy Observatory's database on national AI strategies and policies. It's a live repository of over 1,000 AI-related policies across 69 countries, territories, and the EU. In the emerging AI-related regulation section, we can see how many related policies different countries currently have, what groups they target, and the challenges they address.

Of the 240 relevant policies cataloged, Saudi Arabia (21) has the most policies currently in place, followed by the UK (20) and then the US (18). Firms of any size are the third most commonly targeted group, with a total of 112 related policies. Risks to human safety (81), risks to fairness (66), and data protection and right to privacy (61) are the most commonly targeted challenges. These statistics indicate that many emerging AI-related regulations strongly prioritize risk mitigation among businesses, supported by observations from the IDC that concerns about AI risks are driving an increasing amount of AI regulation.

Businesses with higher levels of risk management and responsible AI seem to be more prepared for emerging regulations. First, IAPP and EY's Autumn 2023 survey found that respondents whose organizations lack AI Governance were far more likely to lack confidence in their privacy compliance capabilities than those with AI Governance (65% versus 12%). This result aligns with BCG's 2022 survey, in which businesses with more mature responsible AI programs were more likely to report feeling ready to meet emerging AI regulation requirements (51%) than businesses with new responsible AI programs (30%).

Results from IBM's 2023 survey tell a similar story. Fewer than 60% of executives feel prepared for gen AI regulation, while 69% expect a regulatory fine. This feeling of being unprepared is significantly holding businesses back. Fifty-six percent are delaying major investments pending clarity on AI standards and regulations, and 72% will forgo gen AI benefits due to ethical concerns. IBM proposes that a lack of AI ethics might explain these feelings. It estimates that the proportion of AI budgets going to AI ethics will triple from 2018 levels of 3% to 9% in 2025.

Adoption Rates and Barriers

Rates of Business Adoption

It's difficult to make confident observations about adoption trends over time because the relevant surveys vary widely in both the audiences they sample and the AI Governance practices they focus on. We therefore make broad observations about trends in AI Governance maturity, with more specific insight into recent rates of risk mitigation implementations.

The maturity of AI Governance programs and frameworks appears to have made limited progress over 2022-2024. Four surveys provide moderately comparable data for understanding 2022-2023 adoption trends.

First, MIT Sloan and BCG's September 2022 study of 1,000 managers found that 52% of businesses have implemented an AI Governance program.25 Second, in March 2023, Gartner found that 46% of 200 data analytics and IT professionals had either produced a dedicated AI Governance framework or had finalized extending existing Data Governance policies.34 Third, KPMG's survey in August 2023 found that 46% of over 200 US business leaders have a mature, responsible AI Governance program.48 A fourth study from November 2023 by IDC found that of 600 global respondents, 45% had "rules, policies, and processes to enforce responsible AI principles."49

Despite the heterogeneity of surveys, the relative closeness in adoption rates across these four surveys makes it seem plausible that global AI Governance adoption rates sat at roughly 45-50% over 2022-2023. Results for the rate of businesses actively developing or implementing AI Governance measures are less well-supported, but two studies put it around 40%.32, 48
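The closeness of these four results can be checked with a quick calculation. The sketch below is illustrative only: it uses the headline percentages quoted above, treats them as comparable even though each survey measures something slightly different, and uses hypothetical variable and function names.

```python
# Headline adoption rates (%) as quoted from the four surveys discussed above.
reported_adoption = {
    "MIT Sloan and BCG (Sep 2022)": 52,
    "Gartner (Mar 2023)": 46,
    "KPMG (Aug 2023)": 46,
    "IDC (Nov 2023)": 45,
}


def summarize(rates: dict[str, int]) -> dict[str, float]:
    """Return the lowest, highest, and unweighted mean rate, plus the spread."""
    values = list(rates.values())
    return {
        "min": min(values),
        "max": max(values),
        "mean": sum(values) / len(values),
        "spread": max(values) - min(values),
    }


summary = summarize(reported_adoption)
print(summary)
```

The mean here is unweighted, so a survey of 200 respondents counts as much as one of 1,000. Weighting by sample size would need exact respondent counts, which the sources only partly report.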

Data for later adoption trends over 2024 is limited. IAPP-EY's December 2023 survey of over 600 privacy professionals had 29% report having implemented AI Governance, while a July 2024 survey indicates that only around 30% of over 100 respondents had or were planning "to soon have the tools and skills for effective deployment, monitoring, and management of AI models."24, 42 It's unclear what these figures tell us about adoption trends after November 2023; there are only two data points and the July 2024 survey doesn't distinguish between rates of AI Governance having been adopted, in-progress adoption, or intentions to adopt. However, one possibility that these results support is that AI Governance adoption rates have declined since late 2023.

The clearest insight about adoption rates comes from more granular evidence for rates of risk mitigation implementations. Stanford and Accenture's comprehensive survey from April 2024 reveals the proportion of businesses that have fully, partially, or not at all implemented a series of best practices across five risk categories: reliability, cybersecurity, fairness, transparency and explainability, and data governance.28

Businesses report largely similar rates of implementation of practices across all risks. For example, the average share of fully implemented risk mitigations ranges from 36% to 39% across the five risk categories, while only 5-12% of businesses haven't implemented any best practices. The most substantial difference in adoption rates is that a larger proportion of businesses have implemented over 50% of the cybersecurity (28%) and fairness (25%) practices examined, compared with only 8-16% of businesses having done so across the three other risks.28

These results overall suggest that roughly 80% of businesses using AI had been actively working towards AI Governance implementation or had an AI Governance program or framework already in place over 2022-2023. Trends for adoption since late 2023, however, remain less clear, with the exception of risk mitigation practices. As of mid-2024, businesses report having implemented around one-third of risk mitigation practices on average across reliability, cybersecurity, fairness, transparency and explainability, and data governance risks. However, the heterogeneity of survey data makes AI Governance adoption rates over time difficult to understand.

Deployment and Operation Barriers

Three key barriers identified across three surveys conducted between late 2022 and mid-2023 are a lack of expertise, leadership understanding (of AI) and communication, and regulatory uncertainty. It's important to note that both ChatGPT's original release and the release of GPT-4 – two key events in the proliferation of gen AI – happened around the time these surveys were conducted.50, 51 Uncertainty about how gen AI works and increasing demand for AI adoption were likely especially high during this period. The key barriers experienced by businesses at that time may have been meaningfully addressed for many since then.

A lack of expertise was both the most widely highlighted barrier across the surveys examined and the most frequently experienced of all barriers identified. In Gartner's survey, 57% of respondents report facing a pronounced skills gap.34 MIT Sloan and BCG find that 54% of managers see a shortage in responsible AI expertise while 53% note inadequate training among staff.33 Finally, IAPP and EY found that 31% of respondents indicate a shortage of qualified AI governance professionals, while 33% report insufficient training or certification opportunities.42

Leadership challenges are evident, too. Gartner found that 48% have high uncertainty about AI's business impact, and 37% reflect a production-first mentality.34 Similarly, 43% of organizations in MIT Sloan and BCG's survey experience low prioritization and funding for responsible AI initiatives, with 42% reporting insufficient awareness among senior leaders.33 From IAPP and EY, 56% of respondents believed a lack of understanding of AI's risks and benefits to be a primary challenge with implementing AI Governance.42

Regulatory uncertainty also remains a critical challenge. Of the privacy professionals IAPP and EY surveyed, 42% cite the rapid evolution of the regulatory landscape as a key challenge to implementing AI Governance, while 16% of data analytics and IT respondents in Gartner's survey feel there is inadequate legal pressure to enforce governance measures.34, 42

Planning and Resource Barriers

Among deployment and operation barriers, technical and organizational barriers appear to be the most substantial. As many as 57% of privacy professionals IAPP and EY surveyed think a lack of control over AI deployment is a major barrier, while 45% feel challenged by the rapid pace of technological change, and 39% find a lack of standardization difficult.42 Data analytics and IT professionals meanwhile report in Gartner's survey that they're especially struggling with fragmented technologies (25%) and poor cross-functional collaboration (22%).34 There's limited direct overlap in the specific barriers highlighted by respondents across surveys, which may indicate that the breadth of logistical challenges is especially large.

Meanwhile, very few risk mitigation or Data Management barriers are reported. Gartner's data analytics and IT professionals cited a lack of Data Governance (26%) and poor Data Management (18%) as prominent barriers. In the same survey, 20% of respondents highlighted insufficient transparency from third-party AI solutions as a barrier. This barrier also relates strongly to logistical barriers.34

Discussion

Recent evidence for rates of AI Governance adoption among businesses indicates that progress broadly plateaued over 2022-2023. Roughly half of businesses have some sort of framework or program in place already, while around 40% seem to have been actively developing and implementing AI Governance as of 2023.4, 25, 34, 42 There's weak evidence to suggest that adoption rates may then have declined to closer to 30% of businesses having AI Governance in place between the end of 2023 and 2024.24, 42

The most granular data available on risk mitigation indicates that although businesses still have considerable progress to make in implementing best practices, the vast majority of large businesses globally have implemented at least one best practice across different AI risk categories.28 More confident insights into recent rates of adoption are limited by the heterogeneity and limited number of surveys and other evidence.

While the scale of each barrier might be different, the key barriers to AI Governance adoption can be grouped under similar categories to those for adopting AI itself. However, unlike with AI adoption, businesses seem to struggle especially with planning and resources before deployment, such as with a lack of expertise. This lack of expertise could be behind downstream deployment issues.33, 34, 42

Even among businesses with a framework, the cumulative effect of these barriers together has left many CEOs with low confidence about being able to effectively deploy AI Governance. One survey from IBM showed that of over 2,500 CEOs globally at the beginning of 2024, only 39% whose businesses have a framework believed their organization's AI Governance was effective.36 Evidence, therefore, indicates that not only is AI Governance adoption currently low and possibly static across businesses using AI but also that many existing AI Governance frameworks and programs might be failing to meet business needs.

Conclusion

AI Governance is rapidly evolving as businesses, regulators, and industry leaders work to establish effective frameworks that balance innovation with risk mitigation. While the adoption of AI Governance frameworks has progressed, evidence suggests that implementation remains inconsistent, with many organizations struggling to establish effective oversight and operational mechanisms.

The challenges businesses face – ranging from expertise shortages to regulatory uncertainty – underscore the need for a proactive approach to AI Governance. Companies that invest in comprehensive, well-structured AI Governance frameworks not only mitigate risks but also unlock significant business benefits, including improved operational efficiency, enhanced trust, and stronger regulatory positioning.

As AI continues to advance, organizations must move beyond viewing AI Governance as a compliance necessity and recognize its strategic value. By integrating AI Governance into broader corporate governance structures, businesses can position themselves to harness AI’s full potential while maintaining ethical integrity, regulatory alignment, and stakeholder confidence.

For businesses at any stage of AI adoption, the next step is clear: prioritize AI Governance as a fundamental business function, ensuring that policies, risk management strategies, and oversight structures evolve in tandem with technological advancements.

Endnotes

Mac Jordan

Data Strategy Professionals Research Specialist