Mac supports Data Strategy Professionals with newsletter writing, course development, and research into Data Management trends.

AI Governance Frameworks

Value potential of seven leading frameworks drawn from public and private sector institutions. AI Governance Frameworks Comparison post 5 of 6.

Author: Mac Jordan | Post Date: Mar 14, 2025 | Last Update: Sep 2, 2025

Navigating the complex landscape of AI Governance frameworks has become essential for businesses seeking to deploy AI responsibly. As organizations increasingly integrate AI into their operations, the need for structured guidance on managing AI risks and ensuring responsible development has never been more pressing. Yet the proliferation of AI Governance frameworks from various sectors presents a challenge: which frameworks provide the most value for specific organizational needs?

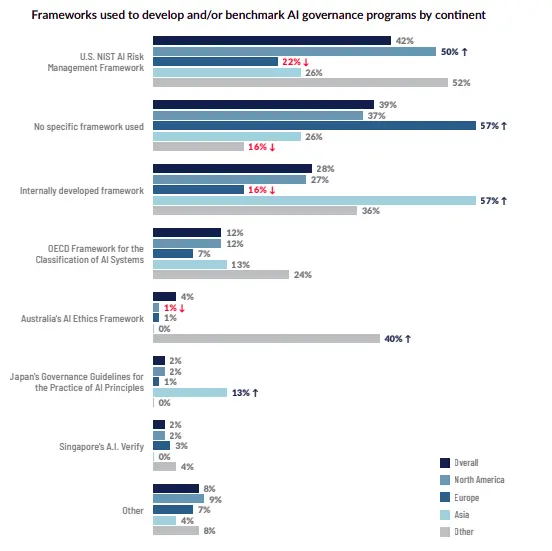

The AI Governance discipline presents frameworks from diverse sources – public sector institutions, technology vendors, and industry consortia – each with different strengths and focuses. According to a 2023 IAPP and EY survey, while NIST's AI Risk Management Framework is utilized by 50% of North American businesses, many organizations in Asia and Europe either develop internal frameworks (57% in Asia) or haven't adopted any formal framework at all (57% in Europe).6 This variation underscores both the evolving nature of AI Governance and the need for businesses to make informed choices about which frameworks best align with their specific requirements.

This post provides a comprehensive assessment of seven leading AI Governance frameworks, analyzing their relative strengths in risk mitigation and Data Management. Research indicates that effective risk mitigation and Data Management are among the highest priorities for AI Governance. By evaluating the breadth, depth, and practicality of each framework's guidance, businesses can better identify which frameworks might be of highest value for them given their particular needs.

Contents

- Overview
- Framework Selection Methodology
- Principle-based Frameworks
  - OECD Framework for the Classification of AI Systems
  - NIST AI Risk Management Framework
  - Singapore's Model AI Governance Framework
  - Alan Turing Institute's HUDERAF for AI Systems
- Vendor-based Frameworks
  - Microsoft's Responsible AI Standards
  - AWS Cloud Adoption Framework for AI, ML, and Gen AI
  - Google's Secure AI Framework Approach
- Key Takeaways
- Discussion
- Conclusion

Overview

AI Governance is defined in this post as the set of policies and mechanisms that help ensure AI systems remain ethical and safe throughout the AI lifecycle. To establish the AI Governance expertise needed for effective AI system deployments, following an AI Governance framework can be very helpful.

Several prominent public and private sector institutions have produced potentially useful – if often complicated and densely written – AI Governance frameworks. Given that the field lacks agreed-upon standards, these frameworks vary in terms of their content and format.

Profiles for each framework outline key insights about a framework's basic details and potential value to businesses. The goal of these framework profiles is to help data practitioners and C-level executives broadly understand what each framework offers them.

First, the profiles provide clear, concise descriptions of each framework, including its focus, aims, main ideas, and specific examples of guidance it offers. Second, the profiles include basic assessments of a framework's breadth, depth, and practicality across risk mitigation and Data Management practices. These assessments partly take supplementary resources for each framework into account.

Framework Selection Methodology

We assessed seven leading frameworks: four public sector frameworks and three vendor-based frameworks. We selected the four public sector frameworks from IAPP-FTI Consulting's 2024 report.1 We investigated AI Governance frameworks from the three leading cloud service vendors: Microsoft, AWS, and Google. The private sector frameworks offer insight into recommended best practices to navigate the novel challenges of AI Governance.

Principle-based Frameworks

OECD Framework for the Classification of AI Systems

Introduction

The Organisation for Economic Co-operation and Development's (OECD) AI in Work, Innovation, Productivity, and Skills (AI-WIPS) program created the OECD Framework for the Classification of AI systems with the support of over 60 experts in their Experts Working Group. The framework was released in February 2022.8

This framework offers high-level guidance about which dimensions AI will most impact, which risks threaten each dimension, and how risks can start to be approached responsibly, as outlined in the content summary below. Definitions, concepts, and criteria are designed to be adaptable to future classifications of AI. Although the framework is primarily targeted at policymakers, regulators, and legislators, businesses can use the framework to understand what systems need to be governed, what risks AI presents, and how businesses might start mitigating these risks.

The Experts Working Group's next steps are to produce an actionable AI system risk methodology, including refined classification criteria based on actual AI systems, metrics to assess subjective AI harm-related criteria, and a common AI incident reporting framework.

Stated Aims

- Promote a common understanding of AI

- Inform registries or inventories

- Support sector-specific frameworks

- Support risk assessment

- Support risk management

Content Summary

The "OECD Framework for the Classification of AI systems" is a comprehensive framework that helps assess AI systems. Specifically, the framework links AI system characteristics with the OECD's AI Principles and outlines case studies for how they might be implemented for each dimension.9

The framework primarily consists of two sections. The first explains the dimensions and characteristics used for assessment, while the second provides several case studies on how assessments work in practice.

The framework classifies AI systems using five dimensions: People & Planet, Economic Context, Data & Input, AI Model, and Task & Output. Core characteristics are identified and explained for each dimension, with notes about why each characteristic matters and how it relates to the OECD's AI Principles. Particular attention is paid to the policy implications of each characteristic, though many are also relevant to how businesses deploy and operate AI.

These characteristics are then used to classify the application of AI systems in specific, real-world contexts. Various organizations were invited to test the framework's usability and robustness through an online survey and public consultations. Key conclusions from the survey include that the framework is best suited to specific AI system applications over generic AI systems and that respondents were more consistent at classifying People & Planet and Economic Context criteria than other dimensions.

Best Practice Highlights

| Table 4.1 – Best Practices (OECD Framework for the Classification of AI Systems) | ||

| Risk Mitigation | ||

| Identifiability of personal data (Privacy-enhanced, p. 38-39) Personal data taxonomies differentiate between different categories of personal data. ISO/IEC 19944 (2017) distinguishes five categories, or "states," of data identifiability:

| ||

| Model transparency and explainability (Accountability and Transparency, Explainable and Interpretable, p. 49) Different AI models can exhibit different degrees of transparency and explainability. Among other things, this entails determining whether meaningful and easy-to-understand information is made available to:

| ||

| Data Management | ||

| Data Quality and appropriateness (Data Quality, p. 39) Data appropriateness (or qualification) involves defining criteria to ensure that the data are appropriate for use in a project, fit for purpose, and relevant to the system or process following standard practice in the industry sector. For example, in clinical trials to evaluate drug efficiency, criteria for using patient data must include patients' clinical history – previous treatments, surgery, etc. Data quality also plays a key role in AI systems, as do the standards and procedures to manage data quality and appropriateness. An AI application or system applies standard criteria or industry-defined criteria to assess:

| ||

| Provenance of data and input (Metadata, Data Quality, p. 36) The following list draws on the data provenance categorization made by Abrams (Abrams, 2014[16]) and the OECD (OECD, 2019[15]) of data collected with decreasing levels of awareness. It should be noted that these categories can overlap, and most systems will combine data from different sources. Here, we broaden the original categorization that focused on personal data to also cover expert input and non-personal data, as well as synthetically generated data.

| ||

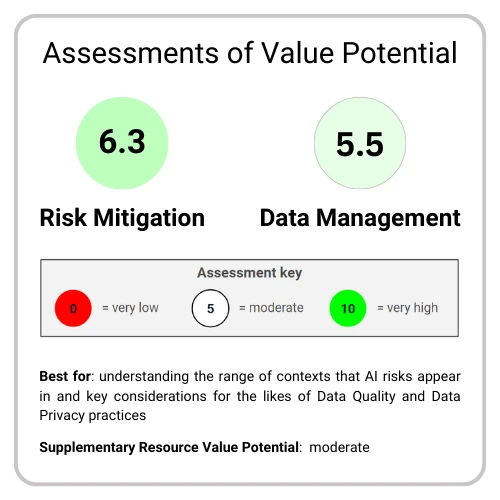

Assessment Results

Table 4.2 – Risk Mitigation (OECD Framework for the Classification of AI Systems)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 10 | Breadth | |
| 6 | Depth | A fair amount of detail is given to mitigating risks associated with data, such as bias, data privacy, and transparency. Other risks, such as those to well-being and society covered under People & Planet, have far less detail. |
| 3 | Practicality | Even among the most detailed practices outlined, relatively little of this would be immediately usable to mitigate risks. The content is more descriptive than procedural. |

Average score: 6.3

Table 4.3 – Data Management (OECD Framework for the Classification of AI Systems)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 5.5 | Breadth | |
| 6 | Depth | For each characteristic under Data & Input, many elements and key terms are identified with details. However, the amount of detail varies widely. |
| 5 | Practicality | Responsibilities related to data collection and checking Data Quality are practical, but most other practices are more descriptive than procedural. |

Average score: 5.5
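The average scores in the assessment tables are consistent with a simple mean of the Breadth, Depth, and Practicality ratings, rounded to one decimal place. The post does not state the formula, so the sketch below is an assumption that happens to reproduce the published figures; the function name `average_score` is illustrative.

```python
from statistics import mean


def average_score(breadth: float, depth: float, practicality: float) -> float:
    """Assumed formula: unweighted mean of the three ratings, one decimal place."""
    return round(mean([breadth, depth, practicality]), 1)


# Ratings copied from Tables 4.2 and 4.3 (OECD framework).
oecd_risk_mitigation = average_score(10, 6, 3)
oecd_data_management = average_score(5.5, 6, 5)
print(oecd_risk_mitigation, oecd_data_management)
```

An unweighted mean treats the three criteria as equally important. A business that cares mostly about practicality could swap in its own weights before comparing frameworks.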

Use Cases

OneTrust added a checklist template based on the OECD Framework for the Classification of AI Systems to their AI Governance solution.

Supplementary Resources

- AI, data governance and privacy: primarily useful for Data Privacy and privacy risks considerations10

- Tools for Trustworthy AI: broadly useful for AI Governance implementation, especially towards trustworthy AI7

- Using AI in the Workplace: identifies the risks of using AI in the workplace and how they might be addressed11

NIST AI Risk Management Framework

Introduction

The US National Institute of Standards and Technology (NIST) produced the AI Risk Management Framework (AI RMF) in collaboration with the public and private sector, releasing the framework in January 2023.3

The framework defines the types of risks that AI presents and proposes four functions that enable dialogue, understanding, and activities to manage AI risks and responsibly develop trustworthy AI systems. NIST deliberately designed it to be non-sector-specific and use-case agnostic so that all organizations and individuals – referred to as "AI actors" – who play an active role in the AI system lifecycle can benefit from its guidance.

NIST will continue to update, expand, and improve the framework based on evolving technology, the global standards landscape, and AI community experience and feedback. For example, evaluating the AI RMF's effectiveness in conjunction with the AI community, including ways to better measure bottom-line improvements in the trustworthiness of AI systems, will be a priority. NIST plans to conduct a formal review no later than 2028.

Stated Aims

- Equip organizations and individuals with approaches that increase the trustworthiness of AI systems

- Help foster the responsible design, development, deployment, and use of AI systems over time

- Be voluntary, rights-preserving, non-sector-specific, and use-case agnostic

- Be operationalized by organizations in varying degrees and capacities

- Address new risks as they emerge

Content Summary

The "NIST AI Risk Management Framework (AI RMF)" consists of two parts. Together, they offer a comprehensive and action-oriented introduction to mitigating AI risks.

Part 1 provides foundational information about AI risks, trustworthiness characteristics, and framework context. The framework outlines trustworthiness properties, including AI that is valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed.

Part 2 details the core functions and profiles for implementing risk management practices. Four core functions form the basis of AI risk management activities:

- GOVERN: cultivates and implements risk management culture and policies

- MAP: establishes context and frames AI system risks

- MEASURE: analyzes, assesses, and tracks AI risks using quantitative and qualitative methods

- MANAGE: allocates resources to prioritize and address identified risks

The accompanying AI RMF Playbook is an especially valuable supplementary resource. It provides substantially more detail on each action and outcome outlined in the AI RMF, making it critical for effective practical implementation.

Best Practice Highlights

| Table 4.4 – Best Practices (NIST AI Risk Management Framework) | ||

| Risk Mitigation | ||

| GOVERN 6 (all risks, p. 24) Policies and procedures are in place to address AI risks and benefits arising from third-party software and data and other supply chain issues.

| ||

| MAP 5 (all risks, p. 27-28) Impacts on individuals, groups, communities, organizations, and society are characterized.

| ||

| MEASURE 3 (all risks, p. 30-31) Mechanisms for tracking identified AI risks over time are in place.

| ||

| MANAGE 1 (all risks, p. 32) AI risks based on assessments and other analytical output from the MAP and MEASURE functions are prioritized, responded to, and managed.

| ||

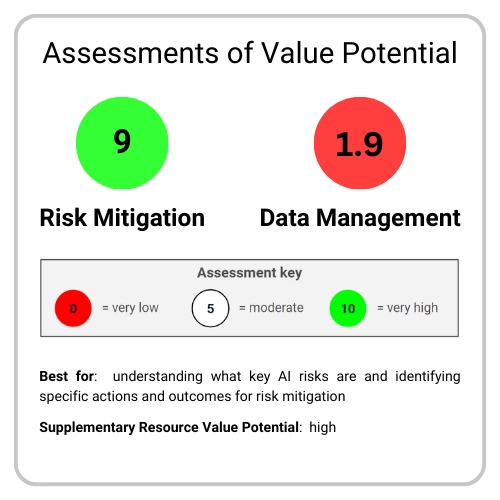

Assessment Results

Table 4.5 – Risk Mitigation (NIST AI Risk Management Framework)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 10 | Breadth | |
| 9 | Depth | Includes an introduction to the scope of each risk with key terms, roles, and responsibilities. Actions and outcomes for four core functions are outlined in sequence. |
| 8 | Practicality | A thorough range of actions and outcomes in a clear, logical sequence is provided for each function, but their lack of detail limits their direct implementability. The AI RMF Playbook is an especially useful supplementary resource for this purpose.12 |

Average score: 9

Table 4.6 – Data Management (NIST AI Risk Management Framework)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 1.8 | Breadth | |
| 2 | Depth | There is minimal explicit focus on Data Management practices, although Data Quality and Data Security practices are implied in relation to specific risks. |
| 2 | Practicality | Most of the relevant guidance offered is in the overview for risks rather than as a practice in the four core functions. |

Average score: 1.9

Use Cases

Various US-based organizations have adopted the NIST AI RMF. For example, Workday says it is using the AI RMF to assess, refine, and strengthen its approach to trustworthy AI.

Supplementary Resources

- NIST AI Risk Management Framework Playbook: in-depth, highly practical guidance on the actions and outcomes of each core function12

- Generative AI Profile: overview of the risks unique to or exacerbated by gen AI13

- NIST Special Publication 1270: Towards a Standard for Identifying and Managing Bias in Artificial Intelligence: in-depth exploration of the context and practical considerations for AI bias2

- NIST Cybersecurity Framework: guidance with a lot of relevance to AI security and resilience risks14

Singapore's Model AI Governance Framework

Introduction

Singapore's Info-communications Media Development Authority (IMDA) and Personal Data Protection Commission (PDPC) developed the Model AI Governance Framework in collaboration with industry stakeholders.4 IMDA and PDPC first released it at the 2019 World Economic Forum in Davos, with a second edition in January 2020.

The framework provides guidance for organizations deploying AI solutions at scale, translating ethical principles into concrete governance practices. Rather than prescribing rigid requirements, it offers flexible guidance that organizations can adapt to their needs, focusing on practical measures to address key ethical and governance issues in AI deployment. IMDA and PDPC deliberately designed it to be sector-agnostic, technology-neutral, and applicable to organizations of any size or business model.

Unlike frameworks focused primarily on high-level principles or policy guidance, Singapore's approach emphasizes operational implementation – helping organizations build stakeholder confidence through responsible AI deployment while aligning their internal structures with accountability-based practices in Data Management and protection.

The PDPC maintains this as a "living" framework, committing to periodic updates that reflect evolving AI technologies, ethical considerations, and governance challenges.

Stated Aims

- Designed to be a practical, ready-to-use tool that organizations can adapt based on their specific needs while maintaining core principles of responsible AI deployment

- Algorithm-agnostic, Technology-agnostic, Sector-agnostic, Scale- and Business-model-agnostic

- Guidance on the key issues to be considered and measures that can be implemented

Content Summary

The Singapore Model AI Governance Framework is especially focused on practical implementation of AI Governance.

It's organized around four key areas. Internal Governance Structures and Measures guides organizations in establishing oversight and accountability mechanisms for ethical AI deployment. The framework then covers Determining Human Involvement in AI-augmented Decision-making, introducing a risk-based methodology with three oversight models and a probability-severity matrix to guide implementation.

Operations Management outlines essential practices for responsible AI development, focusing on data quality, algorithm governance, and model management through explainability, repeatability, and robustness. Finally, Stakeholder Interaction and Communication provides guidance on AI system transparency and stakeholder engagement practices.

The framework emphasizes practical implementation throughout, supporting each area with real-world examples while maintaining flexibility for different organizational contexts.
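The probability-severity matrix described above can be thought of as a lookup from two harm ratings to one of the three oversight models (human-in-the-loop, human-over-the-loop, human-out-of-the-loop). The sketch below is only an illustration: the two-level scale and the specific cell-to-model mapping are assumptions made for this example, since the framework leaves that judgment to each organization.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "human-in-the-loop"          # human makes the final decision
    HUMAN_OVER_THE_LOOP = "human-over-the-loop"      # human supervises and can intervene
    HUMAN_OUT_OF_THE_LOOP = "human-out-of-the-loop"  # AI decides without human override


# ASSUMPTION: hypothetical mapping for illustration, keyed by (severity, probability).
# An organization would set these cells according to its own risk appetite.
MATRIX = {
    (Level.HIGH, Level.HIGH): Oversight.HUMAN_IN_THE_LOOP,
    (Level.HIGH, Level.LOW): Oversight.HUMAN_OVER_THE_LOOP,
    (Level.LOW, Level.HIGH): Oversight.HUMAN_OVER_THE_LOOP,
    (Level.LOW, Level.LOW): Oversight.HUMAN_OUT_OF_THE_LOOP,
}


def suggest_oversight(severity: Level, probability: Level) -> Oversight:
    """Look up a suggested oversight model for a given severity and probability of harm."""
    return MATRIX[(severity, probability)]


print(suggest_oversight(Level.HIGH, Level.LOW).value)
```

Keeping the mapping in a plain dictionary makes it easy to review with non-technical stakeholders and to change without touching the lookup logic.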

Best Practice Highlights

| Table 4.7 – Best Practices (Singapore Model AI Governance Framework) | ||

| Risk Mitigation | ||

| Repeatability (valid and reliable, fair, explainable and interpretable, p. 46) Where explainability cannot be practicably achieved given the state of technology, organizations can consider documenting the repeatability of results produced by the AI model. Repeatability refers to the ability to consistently perform an action or make a decision, given the same scenario. While repeatability (of results) is not equivalent to explainability (of algorithm), some degree of assurance of consistency in performance could provide AI users with a larger degree of confidence. Helpful practices include:

| ||

| Traceability (Accountability and Transparency, Explainable and Interpretable, p. 48-49) An AI model is considered to be traceable if (a) its decisions and (b) the datasets and processes that yield the AI model's decision – including those of data gathering, data labeling, and the algorithms used – are documented in an easily understandable way. The former refers to the traceability of AI-augmented decisions, while the latter refers to traceability in model training. Traceability facilitates transparency and explainability and is also helpful for other reasons. First, the information might also be useful for troubleshooting or investigating how the model was functioning or why a particular prediction was made. Second, the traceability record (in the form of an audit log) can be a source of input data that can be used as a training dataset in the future. Practices that organizations may consider to promote traceability include:

| ||

| Data Management | ||

| Ensuring Data Quality (Data Quality, p. 38) Organizations are encouraged to understand and address factors that may affect the quality of data, such as:

| ||
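To make the repeatability and traceability practices above more concrete, here is a minimal sketch of an audit log for AI-augmented decisions. The record schema, field names, and helper functions (`DecisionRecord`, `log_decision`, `is_repeatable`) are hypothetical and not taken from the framework; they only illustrate recording each decision alongside the model version and a reference to the training data, then checking that the same input yields the same output.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-log entry for an AI-augmented decision (hypothetical schema)."""
    timestamp: str
    model_version: str
    training_data_ref: str  # pointer to the dataset or lineage record used in training
    input_hash: str
    output: str


def fingerprint(features: dict) -> str:
    """Stable hash of the input so identical scenarios can be matched later."""
    canonical = json.dumps(features, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def log_decision(log: list, model_version: str, training_data_ref: str,
                 features: dict, output: str) -> DecisionRecord:
    """Append a traceability record for one decision and return it."""
    record = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        training_data_ref=training_data_ref,
        input_hash=fingerprint(features),
        output=output,
    )
    log.append(record)
    return record


def is_repeatable(log: list, model_version: str, features: dict) -> bool:
    """True if this model version never gave two different outputs for this input."""
    h = fingerprint(features)
    outputs = {r.output for r in log
               if r.model_version == model_version and r.input_hash == h}
    return len(outputs) <= 1


audit_log = []
applicant = {"income": 52000, "tenure_months": 18}  # made-up example input
log_decision(audit_log, "v1.2", "dataset-2024-06", applicant, "approve")
log_decision(audit_log, "v1.2", "dataset-2024-06", applicant, "approve")
print(is_repeatable(audit_log, "v1.2", applicant))
```

Hashing the input rather than storing it keeps personal data out of the log, though an organization that needs to investigate individual predictions may prefer to store the inputs under appropriate access controls.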

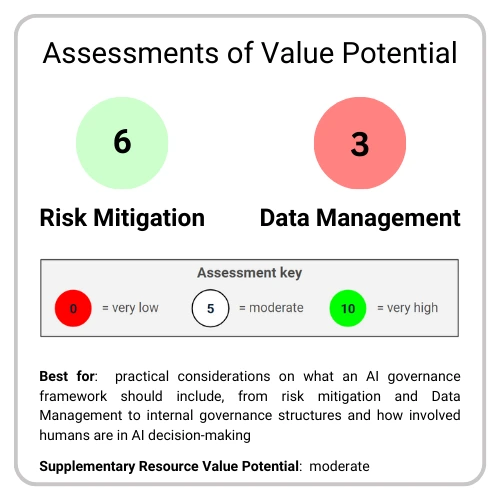

Assessment Results

Table 4.8 – Risk Mitigation (Singapore Model AI Governance Framework)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 7.1 | Breadth | |
| 5 | Depth | Trustworthy AI features are introduced with moderate levels of detail on average but with high variance between them; explainability has more than twice as much coverage as robustness. |
| 6 | Practicality | Some specific actions or steps are included for most of the trustworthiness features covered. A brief real-world case study on Pymetrics managing bias and guidance on using the probability-severity of harm matrix are also offered. |

Average score: 6

Table 4.9 – Data Management (Singapore Model AI Governance Framework)

| Rating | Criteria | Reasoning |
| --- | --- | --- |
| 0.9 | Breadth | |
| 5 | Depth | Moderate coverage of Data Quality considerations is offered, such as data lineage, keeping a data provenance record, and reviewing dataset quality. |
| 3 | Practicality | Guidance is largely given within the context of model development and is descriptive rather than procedural. However, the Data Quality considerations covered would likely apply to AI deployment and operation. |

Average score: 3

Use Cases

Various organizations are using Volume 1 and Volume 2 of the framework.

- v1 example: "DBS put in place a rigorous process to ensure the responsible use of data in developing the AML Filter Model [which leverages AI to fight money laundering]. This aligned to the data accountability practices suggested in the Model AI Governance Framework, which focused on understanding the data lineage, minimising inherent bias and using different datasets for training, testing, and validation."

- v2 example: "To improve the quality of products and reduce the possibility of products being returned, IBM engaged AI Singapore to develop an AI solution to assist its Quality Engineers to make more accurate, consistent and faster labelling of the risk level that every product batch possesses."

Supplementary Resources

- Implementation and Self-Assessment Guide for Organisations: for implementing the Model AI Framework, including guiding questions and industry examples15

- Singapore AI Verify's Model AI Governance Framework for Generative AI: guidance on a combination of AI risks and other considerations for trustworthy gen AI5

- Sample report using the test kit: fictional case studies for how the test kit works in practice16

The Alan Turing Institute's HUDERAF for AI Systems

Introduction

The Alan Turing Institute (ATI) submitted the Human Rights, Democracy and the Rule of Law Assurance Framework (HUDERAF) for AI Systems to the Council of Europe (CoE) in September 2021, following the CoE's Ad Hoc Committee on Artificial Intelligence's December 2020 Feasibility Study.17

The framework deliberately remains 'algorithm-neutral' and practice-based to include different AI applications and adapt to future developments. The framework helps designers, developers, and users of AI systems minimize risks to human rights, democracy, or the rule of law.

The framework aims to deliver its features through a single digital platform with interactive templates and automated reporting tools. The HUDERAF model is designed to be dynamic, with regular reassessment and updates needed to address novel AI innovations, unforeseen applications, and emerging risks to fundamental rights and freedoms.

Stakeholders will achieve this process through inclusive, multi-party evaluations and revisions of the framework. The framework's unique contribution is its integration of human rights due diligence practices with technical AI Governance mechanisms, providing practical tools for organizations to meet their human rights obligations while developing trustworthy AI systems.

Stated Aims

- Provide an accessible and user-friendly set of mechanisms for facilitating compliance with a binding legal framework on AI

- Provide an innovation-enabling way to facilitate better, more responsible innovation practices that conform to human rights, democracy, and the rule of law so that these technologies can optimally promote individual, community, and planetary well-being

- To address the challenges put forward in CAHAI-PDG(2021)05rev, which involve various tools and methodologies to identify and evaluate AI risks to human rights, democracy, and the rule of law

Content Summary

ATI's HUDERAF consists of an integrated set of processes and templates that provide a comprehensive approach to assessing and managing AI risks to fundamental rights and freedoms. The framework is built around the SAFE-D goals that define key trustworthiness characteristics. These include safety, accountability, fairness, explainability, and data quality and protection.

The primary content of the framework is organized into four sections. Each section represents a stage in the process to identify and evaluate risks:

- PCRA (Preliminary Context-Based Risk Analysis): provides initial assessment of context-specific risks to human rights, democracy, and rule of law through structured questions and automated reporting

- SEP (Stakeholder Engagement Process): guides identification and meaningful inclusion of affected stakeholders throughout the project lifecycle using proportionate engagement methods

- HUDERIA (Human Rights Impact Assessment): enables collaborative evaluation of potential and actual impacts through stakeholder dialogue and detailed impact severity assessment

- HUDERAC (Assurance Case): builds structured arguments demonstrating how claims about achieving framework goals are supported by evidence

The framework is supported by detailed templates for each process that consolidate necessary questions, prompts, and actions. For example, the PCRA template features interactive elements that generate customized risk reports through conditional logic. Implementation is intended through a unified digital platform that makes all components accessible and user-friendly.

Best Practice Highlights

| Table 4.10 – Best Practices (Alan Turing Institute's HUDERAF for AI Systems) | ||

| Risk Mitigation | ||

| Accuracy and performance metrics (valid and reliable, p. 284) In machine learning, a model's accuracy is the proportion of examples for which it generates a correct output. This performance measure is sometimes characterized conversely as an error rate or the fraction of cases for which the model produces an incorrect output. As a performance metric, accuracy should be a central component to establishing and enhancing the approach to safe AI. Specifying a reasonable performance level for the system may also often require refining or exchanging the measure of accuracy. For instance, if certain errors are more significant or costly than others, a metric for total cost can be integrated into the model so that the cost of one class of errors can be weighed against that of another. | ||

| Transparency (accountable and transparent, explainable and interpretable, p. 289) The transparency of AI systems can refer to several features, including their inner workings and behaviors and the systems and processes that support them. An AI system is transparent when it is possible to determine how it was designed, developed, and deployed. This can include, among other things, a record of the data used to train the system or the parameters of the model that transform the input (e.g., an image) into an output (e.g., a description of the objects in the image). However, it can also refer to broader processes, such as whether there are legal barriers that prevent individuals from accessing information that may be necessary to understand fully how the system functions (e.g., intellectual property restrictions). | ||

| Source integrity and measurement accuracy (fair, p. 287) Effective bias mitigation begins at the commencement of data extraction and collection processes. Both the sources and instruments of measurement may introduce discriminatory factors into a dataset. When incorporated as inputs in the training data, biased prior human decisions and judgments – such as prejudiced scoring, ranking, interview data, or evaluation – will become the 'ground truth' of the model and replicate the bias in the outputs of the system. Securing discriminatory non-harm therefore requires ensuring that the data sample has optimal source integrity. This involves securing or confirming that the data-gathering processes involve suitable, reliable, and impartial sources of measurement and sound methods of collection. | ||

| Data Management | ||

| Responsible Data Management (Data Management generally, p. 288) Responsible Data Management ensures that the team has been trained on managing data responsibly and securely, identifying possible risks and threats to the system, and assigning roles and responsibilities for how to deal with these risks if they were to occur. Policies on data storage and public dissemination of results should be discussed within the team and with stakeholders and clearly documented. | ||

| Data Security (Data Security, p. 288) Each Party shall provide that the controller, and, where applicable, the processor, takes appropriate security measures against risks such as accidental or unauthorized access to, destruction, loss, use, modification, or disclosure of personal data. Each Party shall provide that the controller notifies, without delay, at least the competent supervisory authority within the meaning of Article 15 of this Convention of those data breaches which may seriously interfere with the rights and fundamental freedoms of data subjects. | ||

| Consent (or legitimate basis) for processing (Data Security, p. 288) Each Party shall provide that data processing can be carried out based on the free, specific, informed, and unambiguous consent of the data subject or some other legitimate basis laid down by law. The data subject must be informed of risks that could arise in the absence of appropriate safeguards. Such consent must represent the free expression of an intentional choice, given either by a statement – which can be written, including by electronic means, or oral – or by a clear affirmative action that clearly indicates in this specific context the acceptance of the proposed processing of personal data. Therefore, silence, inactivity, or pre-validated forms or boxes should not constitute consent. No undue influence or pressure – which can be of an economic or other nature – whether direct or indirect, may be exercised on the data subject, and consent should not be regarded as freely given where the data subject has no genuine or free choice or is unable to refuse or withdraw consent without prejudice. The data subject has the right to withdraw their consent at any time (which is to be distinguished from the separate right to object to processing). | ||
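The accuracy guidance in Table 4.10 describes three related measures: accuracy, its converse the error rate, and a total-cost metric that weighs one class of errors against another. A minimal sketch for a binary classifier follows; the labels, predictions, and cost values are made-up illustrations, not figures from HUDERAF.

```python
def accuracy(y_true: list, y_pred: list) -> float:
    """Proportion of examples for which the model output is correct."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def total_cost(y_true: list, y_pred: list, fn_cost: float, fp_cost: float) -> float:
    """Sum of error costs, with false negatives and false positives priced separately."""
    cost = 0.0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 0:    # false negative
            cost += fn_cost
        elif t == 0 and p == 1:  # false positive
            cost += fp_cost
    return cost


# Hypothetical data: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

acc = accuracy(y_true, y_pred)
error_rate = 1 - acc
# ASSUMPTION: a missed positive is treated as five times as costly as a false alarm.
cost = total_cost(y_true, y_pred, fn_cost=5.0, fp_cost=1.0)
print(acc, error_rate, cost)
```

Two models with identical accuracy can have very different total costs, which is why the choice of cost weights deserves as much scrutiny as the headline metric.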

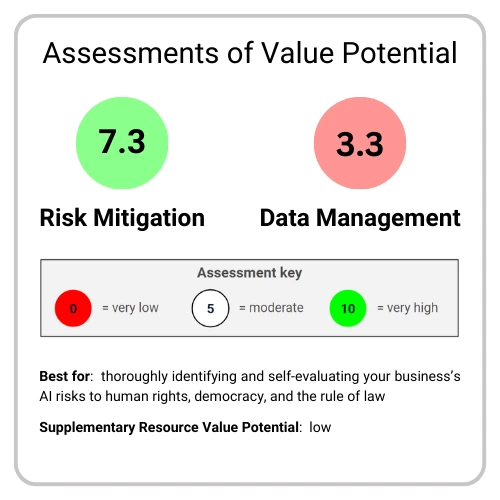

Assessment Results

| Table 4.11 – Risk Mitigation (Alan Turing Institute's HUDERAF for AI Systems) | ||

| Rating | Criteria | Reasoning |

| 10 | Breadth |

|

| 6 | Depth | Substantial depth is offered on what human rights, democracy, and rule of law specifically entail (e.g., p. 23-46) and how potential adverse impacts (risks) can be identified, assessed, and generally mitigated (e.g., p. 238-250). However, no guidance is given on how specific risks may be mitigated on the basis that 'specifying the goals [of an assurance case for mitigating risks] and operationalizing the relevant properties is highly contextual.' |

| 6 | Practicality | Extensive step-by-step instructions, corresponding resources, and illustrative examples are offered to identify, evaluate, and mitigate AI risks. Although this guidance thoroughly supports businesses in conducting their own risk assessments, the lack of detailed guidance on practices for risk mitigation significantly undermines the degree to which businesses can directly use this framework to mitigate risks in practice. |

| Average score: 7.3 | ||

| Table 4.12 – Data Management (Alan Turing Institute's HUDERAF for AI Systems) | ||

| Rating | Criteria | Reasoning |

| 2.9 | Breadth |

|

| 3 | Depth | Although Data Management practices are referred to or implied throughout the framework, most explicit Data Management practices are only explored briefly as goals for the framework. |

| 4 | Practicality | The most practical guidance offered is for identifying risks over the data lifecycle as part of a preliminary context-based analysis for which there is a step-by-step template (e.g., p. 106). Most of the guidance associated with Data Quality and Data Security practices isn't focused on actions. |

| Average score: 3.3 | ||

Supplementary Resources

- Human rights by design: explores the impact of AI on human rights and how organizations can implement 10 steps to protect human rights18

- Ad hoc Committee on Artificial Intelligence (CAHAI) meeting: exploration of AI's impact and how risks might be addressed19

Vendor-based Frameworks

Microsoft's Responsible AI Standard

Introduction

Microsoft released version 1 of its Responsible AI Standard in September 2019, followed by v2 in June 2022.20 Microsoft developed it as part of a multi-year effort to operationalize its six AI principles into concrete product development requirements. The standard was created in response to a recognized policy gap, where existing laws and norms had not kept pace with AI's unique risks and societal needs. Microsoft leveraged expertise from its research, policy, and engineering teams to develop this guidance.

Microsoft primarily designed the framework for its product development teams but is making it publicly available to share learnings and contribute to broader discussions about AI Governance. Various stakeholders, including system owners, developers, customer support teams, and organizations using Microsoft's AI systems, can use it.

Microsoft frames this as a "living document" that will continue to evolve based on new research, technologies, laws, and learnings from both inside and outside the company. Microsoft actively seeks feedback through its ResponsibleAIQuestions portal and emphasizes the importance of collaboration between industry, academia, civil society, and government to advance responsible AI practices.

Stated Aims

- To operationalize Microsoft's six AI principles

- To provide "concrete and actionable guidance" for product development teams

- To fill the "policy gap" where "laws and norms had not caught up with AI's unique risks or society's needs"

Content Summary

The "Microsoft Responsible AI Standard v2" translates Microsoft's six AI principles into concrete requirements, tools, and practices for product development teams.

The document consists of six core goal categories, each with detailed implementation requirements. The first section, Accountability Goals, focuses on impact assessment, oversight, and data governance, while subsequent sections address Transparency, Fairness, Reliability & Safety, Privacy & Security, and Inclusiveness.

Each goal category contains specific requirements that must be met. For example, Fairness has three major goals: Quality of Service refers to ensuring similar performance across demographic groups, Allocation of Resources and Opportunities focuses on minimizing disparate outcomes, and Minimization of Stereotyping aims to prevent harmful bias. For each goal, the document provides detailed requirements along with recommended tools and practices for implementation.

The framework pays particular attention to accountability and documentation requirements, specifically identifying and mitigating risks before deployment and maintaining ongoing monitoring afterward. While some areas, such as security and privacy, aren't covered explicitly and are instead deferred to separate Microsoft policies, other key risks are covered in moderately high detail.

Best Practice Highlights

| Table 4.13 – Best Practices (Microsoft's Responsible AI Standard) | ||

| Risk Mitigation | ||

| System intelligibility for decision-making (valid and reliable, explainable and interpretable, p. 9) Design the system, including, when possible, the system UX, features, reporting functions, and educational materials, so that stakeholders identified in requirement T1.1 can:

| ||

| Quality of service (accountable and transparent, fair, privacy-enhanced, p. 13) Publish information for customers about:

| ||

| Failures and remediations (secure and resilient, safe, p. 23) For each case of a predictable failure likely to have an adverse impact on a stakeholder, document the failure management approach:

| ||

| Data Management | ||

| Data Governance and Management (Data Governance, p. 7) Microsoft AI systems are subject to appropriate Data Governance and Management practices.

| ||

Assessment Results

| Table 4.14 – Risk Mitigation (Microsoft's Responsible AI Standard) | ||

| Rating | Criteria | Reasoning |

| 8.6 | Breadth |

|

| 7 | Depth | Several pages of instructions are provided for most of the risks covered, including several goals for which a series of requirements, tools, and practices are identified. However, much of this relates specifically to model training and testing rather than deployment or operation, and very little guidance is offered on understanding the risks themselves. |

| 8 | Practicality | The format of guidance is nearly entirely practice-focused, with specific targets and actions being outlined in sequence. |

| Average score: 7.9 | ||

| Table 4.15 – Data Management (Microsoft's Responsible AI Standard) | ||

| Rating | Criteria | Reasoning |

| 1.8 | Breadth |

|

| 1 | Depth | Nearly all references to data explicitly relate to model training or testing. Some guidance on Data Governance is relevant across the AI lifecycle. |

| 3 | Practicality | The relevant guidance on Data Governance offers actionable, though brief, instructions on governing data across the AI lifecycle. |

| Average score: 1.9 | ||

Use Cases

Quisitive (a Microsoft partner) uses Microsoft's Responsible AI Standard to help client organizations implement ethical AI practices.

Supplementary Resources

- Microsoft Responsible AI Impact Assessment Guide: guidance on how to identify AI's impacts on an organization 21

- RAI Transparency report: shares Microsoft's governance processes for developing and deploying gen AI applications 22

- Microsoft's AI Safety Policies: AI Governance policies focused on AI safety prepared for the UK AI Safety Summit 23

AWS Cloud Adoption Framework for AI, ML, and Gen AI

Introduction

"AWS Cloud Adoption Framework for Artificial Intelligence, Machine Learning, and Generative AI (CAF-AI)" was published in February 2024 through a collaborative effort between multiple AWS entities, including the Generative AI Innovation Center, Professional Services, Machine Learning Solutions Lab, and other specialized teams.24

This framework provides organizations with a comprehensive mental model and prescriptive guidance for generating business value from AI technologies. It maps out the AI transformation journey, detailing how organizational capabilities mature over time and outlining the foundational capabilities needed to grow AI maturity. The framework is structured across six key perspectives – Business, People, Governance, Platform, Security, and Operations – each targeting specific stakeholder groups from C-suite executives to technical practitioners.

While primarily designed for organizations seeking to implement enterprise-level AI adoption beyond proof of concept, CAF-AI serves multiple purposes. It acts as both a starting point and ongoing guide for AI, ML, and generative AI initiatives, facilitates strategic discussions within teams and with AWS Partners, and helps organizations align their cloud and AI transformation journeys.

AWS designed CAF-AI to be dynamic and evolving, serving as a constantly growing index of considerations for enterprise AI adoption. As part of AWS's larger Cloud Adoption Framework, it enables organizations to think holistically about their cloud and AI transformations. AWS plans regular updates to reflect the rapidly evolving AI landscape.

Stated Aims

- Describe the journey businesses experience as their organizational capabilities on AI and ML mature

- Provide prescriptive guidance on the target state of foundational capabilities and how to evolve them step by step to generate business value along the way

- Inspire and inform customers' mid-term planning and strategy for AI Governance

Content Summary

The AWS CAF-AI is organized into two main components. First, it briefly presents the AI cloud transformation value chain, which explains how AI capabilities lead to business outcomes. It then outlines a four-stage AI transformation journey – Envision, Align, Launch, Scale – that organizations progress through iteratively.

Second, it details foundational capabilities across six key perspectives. The six perspectives forming the framework's core are:

- Business: ensures AI investments accelerate digital transformation and business outcomes

- People: bridges technology and business, evolving culture toward continual growth

- Governance: orchestrates AI initiatives while maximizing benefits and minimizing risks

- Platform: builds enterprise-grade, scalable cloud platforms for AI operations and development

- Security: achieves confidentiality, integrity, and availability of data and cloud workloads

- Operations: ensures AI services meet business needs and maintain reliability

Each perspective contains several foundational capabilities that are either enriched from the original AWS Cloud Adoption Framework or newly introduced for AI-specific needs. For example, new capabilities include ML Fluency (People perspective) and Responsible Use of AI (Governance perspective), while existing capabilities such as Data Protection (Security perspective) are enhanced with AI-specific considerations. The framework provides detailed guidance on implementing each capability, complete with best practices, considerations, and implementation steps.

Best Practice Highlights

| Table 4.16 – Best Practices (AWS Cloud Adoption Framework for AI, ML, and Gen AI) | ||

| Risk Mitigation | ||

| Security assurance (secure and resilient, privacy-enhanced, valid and reliable, p. 43) Continuously monitor and evaluate the three critical components of your workloads:

| ||

| Responsible use of AI (explainable and interpretable, fair, p. 28) Embed explainability by design into your AI lifecycle where possible and establish practices to recognize and discover both intended and unintended biases. Consider using the right tools to help you monitor the status quo and inform risk. Use best practices that enable a culture of responsible use of AI and build or use systems to enable your teams to inspect these factors. While this cost accumulates before the algorithms reach the production state, it pays off in the mid-term by mitigating damage. Especially when you are planning to build, tune, or use a foundation model, inform yourself about new emerging concerns such as hallucinations, copyright infringement, model data leakage, and model jailbreaks. Ask if and how the original vendor or supplier has taken an RAI approach to the development, as this trickles down directly into your business case. | ||

| Data Management | ||

| Data Engineering (Data Integration & Interoperability, Data Quality, Data Modeling & Design, p. 288) Automate data flows for AI development. As data is a first-level citizen of any AI strategy and development process, data engineering becomes significantly more important and cannot be an afterthought but a readily available capability within your organization and teams. As data is used to actively shape the behavior of an AI system, engineering it properly is decisive. Data preparation tools are an essential part of the development process. While the practice itself is not changing fundamentally, its importance and need for continuous evolution are rising. Consider integrating your data pipelines and practice directly into your AI development process and model training through streamlined and seamless pre-processing. Consider transitioning from traditional Extraction, Transformation, and Load (ETL) processes to a zero-ETL approach. With such an approach to data engineering, you reduce the friction between your data and AI practices. Enable and empower your AI team to combine data from multiple sources into a unified view as a self-service capability. Couple this with visualization tools and techniques that help your AI and data teams explore and understand their data visually. When possible, focus on making your data accurate, complete, and reliable. Design data models or transformations as part of your workflows, specifically for machine learning – normalized, consistent, and well-documented – to facilitate efficient data handling and processing. This significantly improves the performance of your AI applications and reduces friction in the development process. | ||

| Data Architecture (Data Architecture, p. 34) As data movement is becoming more important for AI systems, harden your architecture for data-movement requirements:

| ||

Assessment Results

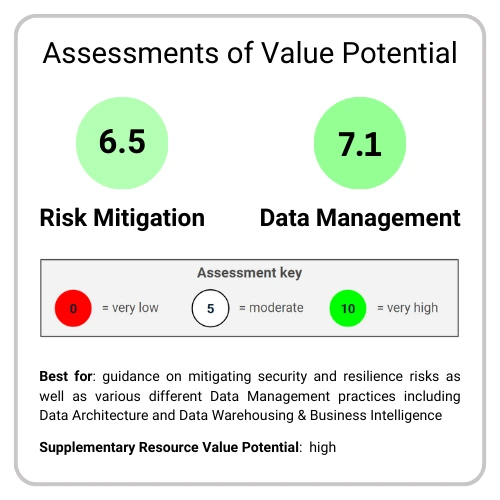

| Table 4.17 – Risk Mitigation (AWS Cloud Adoption Framework for AI, ML, and Gen AI) | ||

| Rating | Criteria | Reasoning |

| 8.6 | Breadth |

|

| 5 | Depth | Under the Security perspective, considerable detail is offered on various risks. However, this detail is predominantly relevant to model training. Outside of this, detailed coverage is very limited. |

| 6 | Practicality | Guidance under the Security perspective is highly practical, but, consistent with our assessment of depth, guidance outside of this is largely limited to basic references and descriptions. |

| Average score: 6.5 | ||

| Table 4.18 – Data Management (AWS Cloud Adoption Framework for AI, ML, and Gen AI) | ||

| Rating | Criteria | Reasoning |

| 7.3 | Breadth |

|

| 8 | Depth | Although the amount of detail offered on any one area of Data Management varies greatly, much of the content from the Governance, Platform, and Security sections explains how Data Management connects to AI with a high level of specificity. |

| 6 | Practicality | There's a mix of descriptive and procedural guidance. The document is not formatted with direct practical application in mind but nonetheless specifies many actions that can be taken. |

| Average score: 7.1 | ||

Supplementary Resources

- AWS Well-Architected Framework Machine Learning Lens: extensive guidance on different AI Governance best practices across the AI lifecycle25

- AWS' responsible use of machine learning: guidance on how to deploy and operate ML systems26

- AWS' AI Safety guidelines: AI Governance policies focused on AI safety prepared for the UK AI Safety Summit27

Google's Secure AI Framework Approach

Introduction

Google released the Secure AI Framework (SAIF) Approach in June 2023.28 The SAIF is "a conceptual framework for secure artificial intelligence (AI) systems" with strong influence from security best practices. The document assessed here is not the SAIF itself, which isn't yet publicly accessible, but guidance on how the SAIF may be applied.

The SAIF Approach covers several priority elements under each of the SAIF's six core elements. It is relevant to security and risk professionals and to cross-functional teams, including business owners, technical practitioners, legal experts, and ethics specialists.

Google has committed to SAIF's ongoing development alongside customers, partners, industry stakeholders, and governments. Future development will include a deeper exploration of specific topics, aiming to enable secure-by-default AI advancement across the industry. Google has not yet indicated when it will publicly release SAIF.

Stated Aims

- Provide high-level practical considerations on how organizations could go about building the SAIF approach into their existing or new adoptions of AI

- Outline the priority elements that need to be addressed under each of the SAIF's six core elements

Content Summary

Google's "Secure AI Framework Approach" translates security best practices into a practical implementation guide organized around six core elements, with an emphasis on integrating security considerations throughout the AI lifecycle.

The document is structured in four implementation steps followed by a detailed exploration of the six core elements. The four steps cover understanding AI use cases, assembling cross-functional teams, establishing baseline AI knowledge, and applying the framework elements.

The six core elements then address expanding security foundations, extending detection and response capabilities, automating defenses, harmonizing platform controls, adapting control mechanisms, and contextualizing AI system risks. Each core element contains specific, actionable guidance. For example, "Expand strong security foundations" covers Data Governance, supply chain management, security control adaptation, and talent retention.

The framework pays particular attention to risk assessment and threat response, with specific emphasis on evolving AI security and resilience threats such as prompt injection and data poisoning. While the framework defers some technical details to future documentation, it comprehensively covers organizational, operational, and security considerations for AI implementation.

Best Practice Highlights

| Table 4.19 – Best Practices (Google's Secure AI Framework Approach) | ||

| Risk Mitigation | ||

| Extend detection and response (secure and resilient, p. 6) Develop an understanding of threats that matter for AI usage scenarios, the types of AI used, etc. Organizations that use AI systems must understand the threats relevant to their specific AI usage scenarios. This includes understanding the types of AI they use, the data they use to train AI systems, and the potential consequences of a security breach. By taking these steps, organizations can help protect their AI systems from attack. Prepare to respond to attacks against AI and issues raised by AI output. Organizations that use AI systems must have a plan for detecting and responding to security incidents and mitigating the risks of AI systems making harmful or biased decisions. By taking these steps, organizations can help protect their AI systems and users from harm. Specifically focusing on AI output for gen AI prepare to enforce content safety policies. Gen AI is a powerful tool for creating a variety of content, from text to images to videos. However, this power also comes with the potential for abuse. For example, gen AI could be used to create harmful content, such as hate speech or violent images. To mitigate these risks, it is important to prepare to enforce content safety policies. Adjust your abuse policy and incident response processes to AI-specific incident types, such as malicious content creation or AI privacy violations. As AI systems become more complex and pervasive, it is important to adjust your abuse policy to deal with abuse cases and adjust your incident response processes to account for AI-specific incident types. These types of incidents can include malicious content creation, AI privacy violations, AI bias, and general abuse of the system. | ||

| Adjust controls (secure and resilient, p. 8) Conduct Red Team exercises to improve safety and security for AI-powered products and capabilities. Red Team exercises are a security testing method where a team of ethical hackers attempts to exploit vulnerabilities in an organization's systems and applications. This can help organizations identify and mitigate security risks in their AI systems before malicious actors can exploit them. Stay on top of novel attacks, including prompt injection, data poisoning, and evasion attacks. These attacks can exploit vulnerabilities in AI systems to cause harm, such as leaking sensitive data, making incorrect predictions, or disrupting operations. By staying up-to-date on the latest attack methods, organizations can take steps to mitigate these risks. | ||

| Automate defenses (secure and resilient, p. 7) Identify the list of AI security capabilities focused on securing AI systems, training data pipelines, etc. AI security technologies can protect AI systems from a variety of threats, including data breaches, malicious content creation, and AI bias. Some of these technologies include traditional data encryption, access control, and auditing, which can be augmented with AI and newer technologies that can perform training data protection, as well as model protection. Use AI defenses to counter AI threats, but keep humans in the loop for decisions when necessary. AI can be used to detect and respond to AI threats, such as data breaches, malicious content creation, and AI bias. However, humans must remain in the loop for important decisions, such as determining what constitutes a threat and how to respond. This is because AI systems can be biased or make mistakes, and human oversight is necessary to ensure that AI systems are used ethically and responsibly. Use AI to automate time-consuming tasks, reduce toil, and speed up defensive mechanisms. Although it seems like a more simplistic point in light of the uses of AI, using AI to speed up time-consuming tasks will ultimately lead to faster outcomes. For example, it can be time-consuming to reverse engineer a malware binary. However, AI can quickly review the relevant code and provide an analyst with actionable information. Using this information, the analyst could then ask the system to generate a YARA rule looking for these actions. In this example, there is an immediate reduction of toil and faster output for the defensive posture. | ||

Assessment Results

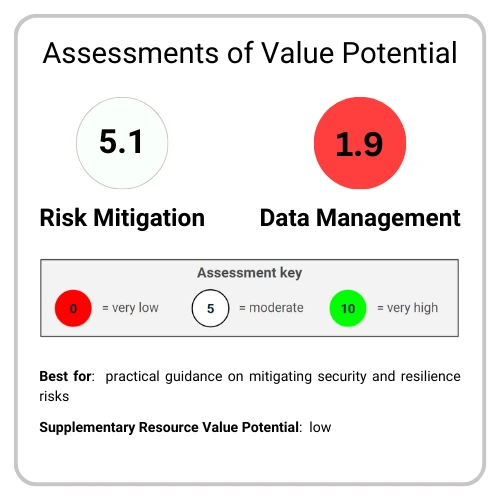

| Table 4.20 – Risk Mitigation (Google's Secure AI Framework Approach) | ||

| Rating | Criteria | Reasoning |

| 4.3 | Breadth |

|

| 5 | Depth | Guidance for secure and resilient AI is offered in moderate to high depth. Various other risks, however, are mentioned only as examples or in a single substantive sentence. |

| 6 | Practicality | The guidance related to secure and resilient AI is action-focused, but much of it is generic, and there's no clear sequence of actions. |

| Average score: 5.1 | ||

| Table 4.21 – Data Management (Google's Secure AI Framework Approach) | ||

| Rating | Criteria | Reasoning |

| 2.7 | Breadth |

|

| 2 | Depth | The primary guidance on Data Management relates to an overview of Data Governance for training data. Very limited guidance is otherwise offered. |

| 1 | Practicality | The guidance on Data Governance is largely descriptive. Only one action-based piece of guidance for Data Security is offered. |

| Average score: 1.9 | ||

Supplementary Resources

- AI principles: list of principles, best practices, and AI responsibility reports 29

- Google DeepMind's safety approach: AI Governance policies focused on AI safety prepared for the UK AI Safety Summit 30

Key Takeaways

| Table 4.22 – Assessments Comparison | ||||||

| Framework | Risk Mitigation: Breadth | Risk Mitigation: Depth | Risk Mitigation: Practicality | Risk Mitigation: Average | Data Management: Breadth | Data Management: Depth | Data Management: Practicality | Data Management: Average |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| OECD Framework for the Classification of AI Systems | 10 | 6 | 3 | 6.3 | 5.5 | 6 | 5 | 5.5 |
| NIST AI Risk Management Framework | 10 | 9 | 8 | 9 | 1.8 | 2 | 2 | 1.9 |
| Singapore Model AI Governance Framework | 7.1 | 5 | 6 | 6 | 0.9 | 5 | 3 | 3 |
| The Alan Turing Institute's Assurance Framework for AI Systems | 10 | 6 | 6 | 7.3 | 2.9 | 3 | 4 | 3.3 |
| Microsoft Responsible AI Standard, v2 | 8.6 | 7 | 8 | 7.9 | 1.8 | 1 | 3 | 1.9 |
| AWS Cloud Adoption Framework for AI, ML, and Gen AI | 8.6 | 5 | 6 | 6.5 | 7.3 | 8 | 6 | 7.1 |
| Google's Secure AI Framework Approach | 4.3 | 5 | 6 | 5.1 | 2.7 | 2 | 1 | 1.9 |
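The average scores in Table 4.22 are consistent with taking the simple mean of each framework's breadth, depth, and practicality ratings and rounding to one decimal place. The following minimal sketch reproduces them under that assumption; the short framework labels, the `RATINGS` structure, and the `average_score` helper are names chosen for illustration only.

```python
from statistics import mean

# Ratings transcribed from Table 4.22 as (breadth, depth, practicality).
# The short framework labels are shorthand chosen for this sketch.
RATINGS = {
    "OECD": {"risk": (10, 6, 3), "data": (5.5, 6, 5)},
    "NIST": {"risk": (10, 9, 8), "data": (1.8, 2, 2)},
    "Singapore": {"risk": (7.1, 5, 6), "data": (0.9, 5, 3)},
    "Turing": {"risk": (10, 6, 6), "data": (2.9, 3, 4)},
    "Microsoft": {"risk": (8.6, 7, 8), "data": (1.8, 1, 3)},
    "AWS": {"risk": (8.6, 5, 6), "data": (7.3, 8, 6)},
    "Google": {"risk": (4.3, 5, 6), "data": (2.7, 2, 1)},
}


def average_score(ratings):
    """Return the mean of the three criterion ratings, rounded to one decimal."""
    return round(mean(ratings), 1)


# Average score per framework and best practice category.
AVERAGES = {
    name: {category: average_score(r) for category, r in categories.items()}
    for name, categories in RATINGS.items()
}

if __name__ == "__main__":
    for name, scores in AVERAGES.items():
        print(f"{name:<10} risk={scores['risk']:.1f} data={scores['data']:.1f}")
```

Keeping the three criterion ratings alongside the average makes it easier to see when a middling average hides a wide spread, as with the OECD framework's risk mitigation ratings of 10, 6, and 3.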

| Table 4.23 – Best Practice Highlights | |

| Subcategories | Highlights |

| Risk Mitigation | |

| All areas |

|

| Secure and resilient |

|

| Explainable and interpretable |

|

| Valid and reliable |

|

| Accountable and transparent |

|

| Privacy-enhanced |

|

| Fair – with harmful bias managed |

|

| Safe |

|

| Data Management | |

| All areas |

|

| Data Quality |

|

| Data Security |

|

| Metadata |

|

| Data Governance |

|

| Data Integration & Interoperability |

|

| Data Modeling & Design |

|

| Data Architecture |

|

Discussion

The most evident pattern from our assessments is that leading public sector and vendor-based AI Governance frameworks provide disproportionately more guidance on risk mitigation than on Data Management. The mean overall risk mitigation rating for frameworks is 6.8, while the mean overall Data Management rating is 3.5.

This pattern holds across all three criteria, although mean ratings vary more between criteria for risk mitigation guidance. For Data Management, mean ratings ranged by only 0.6, from breadth (3.3) to depth (3.9). For risk mitigation, the range was 2.4, also between breadth (8.4) and depth (6), but with breadth higher than depth, the reverse of the Data Management result.

With a difference of 5.1, the gap in mean breadth between risk mitigation and Data Management guidance has an outsized impact on the difference in overall ratings. However, the differences in depth (2.1) and practicality (2.7) ratings remain moderately large, indicating that the gap between risk mitigation and Data Management guidance is likely informative about what businesses should expect from these frameworks and isn't simply the result of variance in the breadth of subcategories covered.
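The criterion-level comparison can be recomputed from the ratings in Table 4.22. The sketch below is illustrative only: `criterion_means`, `spread`, and the variable names are assumptions made for this example, and it works from unrounded means, so results can differ by a tenth or two from figures derived from already-rounded values.

```python
from statistics import mean

CRITERIA = ("breadth", "depth", "practicality")

# One (breadth, depth, practicality) tuple per framework, in Table 4.22 order.
RISK = [(10, 6, 3), (10, 9, 8), (7.1, 5, 6), (10, 6, 6),
        (8.6, 7, 8), (8.6, 5, 6), (4.3, 5, 6)]
DATA = [(5.5, 6, 5), (1.8, 2, 2), (0.9, 5, 3), (2.9, 3, 4),
        (1.8, 1, 3), (7.3, 8, 6), (2.7, 2, 1)]


def criterion_means(rows):
    """Mean rating per criterion across all frameworks."""
    return {c: mean(row[i] for row in rows) for i, c in enumerate(CRITERIA)}


def spread(means):
    """Difference between the highest and lowest criterion mean."""
    return max(means.values()) - min(means.values())


risk_means = criterion_means(RISK)
data_means = criterion_means(DATA)

# Gap between risk mitigation and Data Management for each criterion.
gaps = {c: risk_means[c] - data_means[c] for c in CRITERIA}

if __name__ == "__main__":
    for c in CRITERIA:
        print(f"{c:<13} risk={risk_means[c]:.2f} "
              f"data={data_means[c]:.2f} gap={gaps[c]:.2f}")
    print(f"spread: risk={spread(risk_means):.2f} data={spread(data_means):.2f}")
```

Separating the per-criterion gaps from the overall averages shows how much of the overall difference is driven by breadth alone.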

Looking at the aggregated highlights by subcategory, we can see that the average number of relevant highlights per subcategory is far higher for risk mitigation (3.6) than for Data Management (1.6). Indeed, half of DAMA's DMBOK Data Management practice areas aren't featured at all, and of those that are, half of the highlights recorded relate to data quality.31 Highlights were selected without regard for the subcategories they related to.

Although the sample size is very small and the number of practices highlighted per framework varies, the skew in our highlights towards data quality guidance likely reflects the fact that only a small number of data practices, data quality among them, are closely relevant to AI Governance. This skew, on top of the large difference in breadth ratings between best practice categories, might indicate that the DMBOK's Data Management subcategories are a limited benchmark for fairly rating the breadth of relevant data practices that AI Governance frameworks cover.

The possibility that only a limited set of Data Management practices is relevant to AI Governance, alongside the centrality of risk mitigation to AI Governance as a whole, might explain the large differences in breadth, depth, and practicality assessments between best practice categories. However, it's important to note that the range of best practice categories relevant to effective AI Governance is wider than just risk mitigation and Data Management.

For example, guidance on operational tasks and on how frameworks may be practically implemented is essential to a successful AI Governance framework. However, as discussed across our Limitations and Methodology sections, measuring each of these ended up outside this project's scope. Quite apart from the limitations in our assessments of risk mitigation and Data Management guidance, our assessments therefore do not reflect the full value businesses could gain from investigating these frameworks further.

Overall, our framework profiles reveal the value propositions of each framework for two key AI Governance best practice categories and, in particular, the generally greater breadth, depth, and practicality of risk mitigation guidance compared with Data Management guidance. However, limitations, including how the breadth of Data Management guidance was measured and the limited range of AI Governance best practice categories assessed, mean that each framework's overall value proposition may be far higher than our assessments indicate. Each business will gain more or less value from guidance from certain organizations or on certain best practice categories depending on its objectives.

Conclusion

Our assessment of seven leading AI Governance frameworks reveals a clear pattern: while frameworks generally provide robust guidance on risk mitigation, they offer substantially less comprehensive coverage of Data Management practices. This imbalance suggests that organizations may need to combine insights from multiple frameworks to build a comprehensive AI Governance approach.

For businesses prioritizing risk mitigation, NIST's AI RMF stands out as particularly valuable, offering extensive context and step-by-step implementation guidance.3 Among AI-using companies that responded to IAPP-EY's 2023 survey, 42% use the NIST AI RMF.20 Meanwhile, AWS's framework provides the strongest Data Management guidance, particularly when supplemented with its Well-Architected Framework Machine Learning Lens and paired with OECD's framework for data quality practices.8, 24, 25

The varying strengths of these frameworks highlight that no single solution will meet all organizational needs. Instead, businesses should approach AI Governance framework selection based on their specific objectives, risk profiles, and operational contexts. For example, some businesses that prioritize mitigating ethical concerns and human rights impacts may draw from frameworks like The Alan Turing Institute's HUDERAF, while others primarily concerned with security risks might benefit more from Google's SAIF Approach.17, 28
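One hypothetical way to relate the average scores in Table 4.22 to a business's priorities is to blend the two category scores with a priority weight. This is only an illustration and not part of our assessment methodology: the `risk_weight` parameter, the `rank_frameworks` function, and the short framework labels are all assumptions, and the scores cover just two best practice categories.

```python
# Average scores from Table 4.22 as (risk mitigation, Data Management).
# Framework labels are shortened for this sketch.
AVERAGE_SCORES = {
    "OECD": (6.3, 5.5),
    "NIST AI RMF": (9.0, 1.9),
    "Singapore": (6.0, 3.0),
    "Alan Turing Institute": (7.3, 3.3),
    "Microsoft": (7.9, 1.9),
    "AWS CAF-AI": (6.5, 7.1),
    "Google SAIF Approach": (5.1, 1.9),
}


def rank_frameworks(risk_weight):
    """Rank frameworks by a weighted blend of the two average scores.

    risk_weight is a hypothetical priority between 0 and 1: 1.0 means only
    risk mitigation matters, 0.0 means only Data Management matters.
    """
    if not 0 <= risk_weight <= 1:
        raise ValueError("risk_weight must be between 0 and 1")
    data_weight = 1 - risk_weight
    blended = {
        name: risk_weight * risk + data_weight * data
        for name, (risk, data) in AVERAGE_SCORES.items()
    }
    # Highest blended score first.
    return sorted(blended, key=blended.get, reverse=True)


if __name__ == "__main__":
    for weight in (1.0, 0.5, 0.0):
        print(weight, rank_frameworks(weight)[:3])
```

With these figures, a weight of 1.0 places the NIST AI RMF first and a weight of 0.0 places the AWS framework first. A ranking like this is at most a starting point for shortlisting, since objectives, risk profiles, and operational contexts are not captured by two scores.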

Businesses will approach using AI Governance frameworks differently. While some will directly apply one specific framework, others will use multiple frameworks for different purposes or internally develop their own framework. IAPP and EY survey results from early 2023 indicate that the region in which a business is based influences its approach to implementing AI Governance programs.6

For example, the most common approach in North America is to use the US-created NIST AI RMF (50%) while Asian businesses mainly use internally developed AI Governance frameworks (57%). By contrast, a majority of EU businesses don't even use a specific AI Governance framework and instead use existing regulation such as the GDPR to guide their AI Governance programs (57%).6

The diversity of approaches businesses take to using AI Governance frameworks indicates that there's currently no consensus on the best approach to ensuring AI systems are deployed safely to maximize business benefit. Businesses should consider how their objectives and resource capacity align with different approaches to select the best-fit approach.

Endnotes

Mac Jordan

Data Strategy Professionals Research Specialist